A few years ago, Alex Yu and Amit Jain got together to start a company that would allow people to capture objects in 3D using their smartphones – no additional equipment required. At the time, Yu was an artificial intelligence researcher at UC Berkeley while Jain was an Apple employee creating Vision Pro’s multimedia experiences.

Their company, Luma, released a smartphone app in 2021 that quickly gained traction, attracting millions of users (just over two million as of press time). But now, as generative AI technology floods the market, Yu and Jain hope to evolve Luma into something bigger (and, with any luck, better) than they originally envisioned.

Luma announced today that it will begin leveraging a compute cluster of ~3,000 Nvidia A100 GPUs to train new AI models that can, in Yu’s words, “see and understand, show and explain, and ultimately interact with [the] world.”

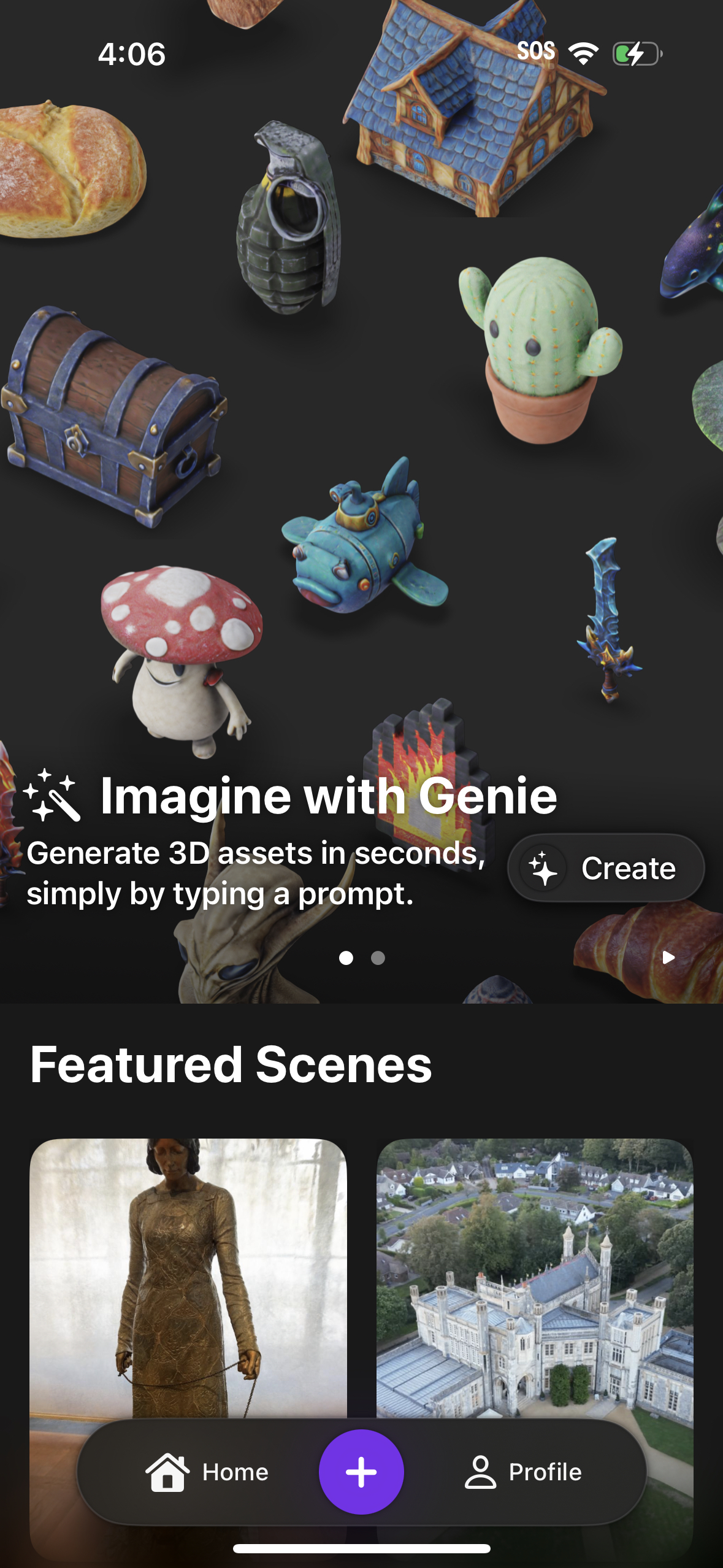

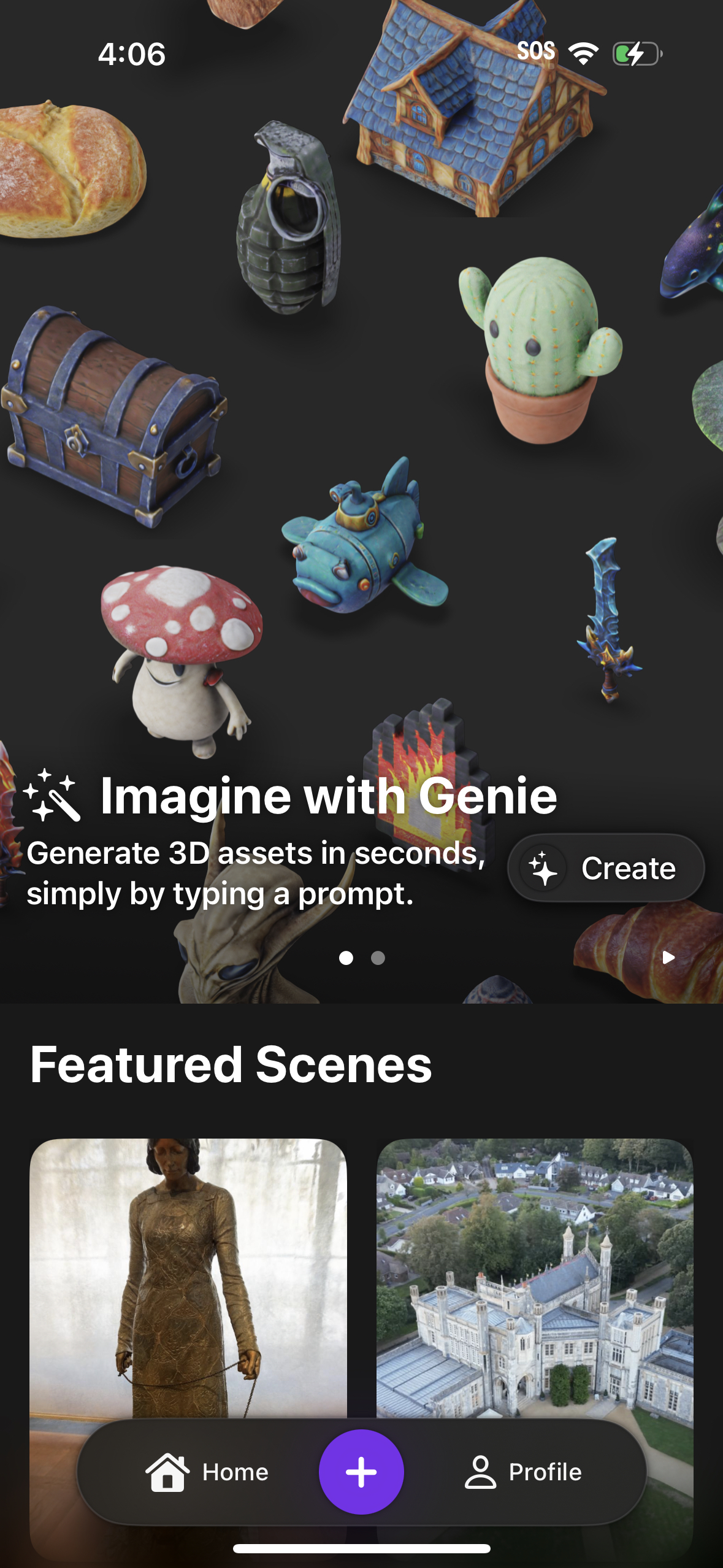

The first phase of this project involves building models that can generate 3D objects from text descriptions. Luma released one such model, called Genie, on its Discord server earlier this year. Next will come the development of “next-generation” AI models that address what Yu describes as the “uncanny valley” problem in current-generation GenAI.

“We believe that multimodality is critical to intelligence. To move beyond language models, the next unlock will come from vision,” Yu told TechCrunch in an email interview. “[However,] AI needs to get much smarter to deliver the potential that people see in it.”

To realize this vision (pardon the pun), Luma raised $43 million in a Series B round with participation from Andreessen Horowitz, among other new and existing backers. The round values Luma at between $200 million and $300 million, according to a source familiar with the matter. Luma’s war chest now stands at more than $70 million.

Luma’s current focus, launching AI models that generate 3D models, is an increasingly competitive space. There are object creation platforms like 3DFY and Scenario, as well as startups like Hypothetic, Kaedim, Auctoria and Mirage. Stability AI recently released a standalone 3D modeling tool, as did the newer venture Atlas. Even incumbents like Autodesk and Nvidia are starting to dip their toes into the field with apps like Get3D, which converts images into 3D models, and ClipForge, which creates models from text descriptions.

Image Credits: Luma

So how will Luma’s tools stand out? Fidelity, mostly, says Yu.

“Current models are all trained on 2D images and, when asked to generate scenes, confuse spaces, bodies and movements,” he said. “It’s very difficult to create anything coherent and usable in the first few attempts, limiting where you can use the outputs… [We’re bringing] the most advanced generative photorealistic technolog[ies] in an intuitive application.”

That’s promising, but it’s early days for Luma’s ambitious new roadmap. An improved version of Genie launches today, but future, more capable generative AI models are a long way off.

But Luma isn’t wasting any time: it plans to double its workforce to 24 by the end of next year while assembling a server cluster running on “thousands” of GPUs. Maybe that will help it make real progress. Time will tell.


“We are growing the team in generative AI research, engineering, design and AI product in order to bring our vision to life and we plan to significantly accelerate the pace here after this round,” said Jain. “With Genie, for the first time creating 3D things at scale was made possible with artificial intelligence, and it grew to 100,000 users in just four weeks… [But we want] to create much more capable, intelligent and useful visual models for our users.”