Adobe says it’s building an AI model for video creation. But it’s not revealing exactly when this model will be released — or much about it other than the fact that it exists.

Offered as an answer of sorts to OpenAI’s Sora, Google’s Imagen 2 and models from a growing number of startups in the nascent generative AI video space, Adobe’s model (part of the company’s expanding Firefly family of generative AI products) will make its way into Premiere Pro, Adobe’s flagship video editing suite, sometime later this year, Adobe says.

Like many generative AI video tools today, Adobe’s model creates footage from scratch (from either a prompt or reference images), and it powers three new features in Premiere Pro: object addition, object removal and generative extend.

They are pretty self-explanatory.

Object addition lets users select a segment of a video clip (the upper third, say, or the lower left corner) and enter a prompt to insert objects within that segment. In a briefing with TechCrunch, an Adobe spokesperson showed a still of a real-world briefcase filled with diamonds generated by Adobe’s model.

Image Credits: AI generated diamonds courtesy of Adobe.

Object removal removes objects from clips, such as boom mics or coffee cups in the background of a shot.

Removing objects with AI. Notice that the results aren’t quite perfect. Image Credits: Adobe

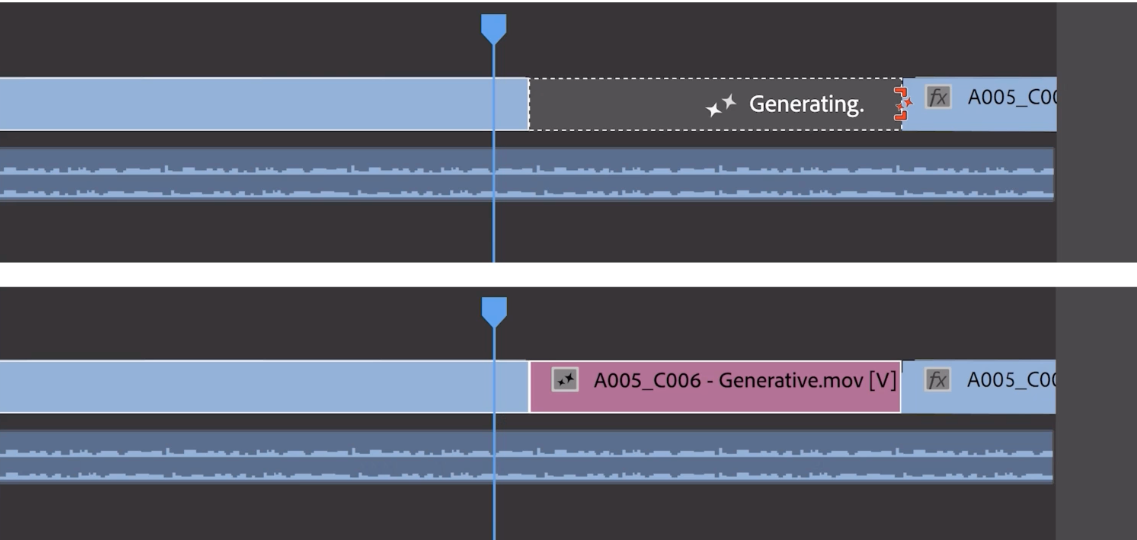

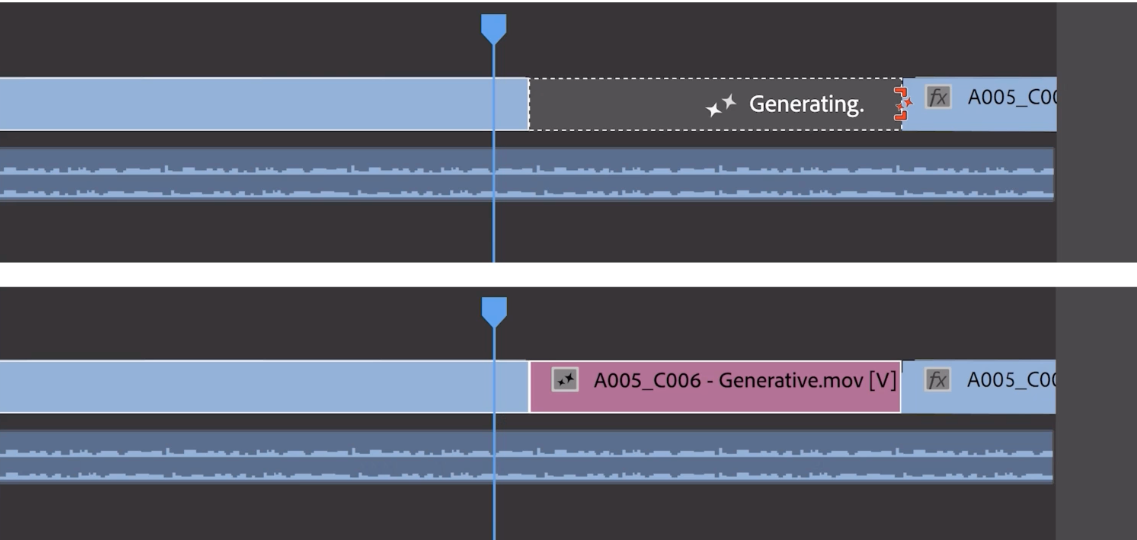

As for generative extend, it adds a few frames to the beginning or end of a clip (unfortunately, Adobe wouldn’t say how many). Generative extend isn’t meant to create whole scenes, but rather to add buffer frames to sync up with a soundtrack or to hold a shot for an extra beat, for instance to add emotional weight.

Image Credits: Adobe

To address the fears of deepfakes that inevitably crop up around generative AI tools like these, Adobe says it’s bringing Content Credentials (metadata for identifying AI-generated media) to Premiere. Content Credentials, a media provenance standard that Adobe backs through its Content Authenticity Initiative, were already in Photoshop and are a component of Adobe’s image-generating Firefly models. In Premiere, they will indicate not only which content was AI-generated, but also which AI model was used to generate it.

I asked Adobe what data – images, videos and so on – was used to train the model. The company would not say, nor would it say how (or if) it compensates contributors to the dataset.

Last week, Bloomberg, citing sources familiar with the matter, reported that Adobe is paying photographers and artists on its stock media platform, Adobe Stock, up to $120 for submitting short video clips to train its video generation model. The pay is said to range from around $2.62 per minute of video to around $7.25 per minute depending on the submission, with higher-quality footage commanding correspondingly higher rates.

That would be a departure from Adobe’s current arrangement with Adobe Stock artists and photographers, whose work it uses to train its image generation models. The company pays those contributors an annual bonus, not a one-time fee, based on the volume of content they have in Stock and how it’s being used. That bonus, however, is subject to an opaque formula and is not guaranteed from year to year.

Bloomberg’s reporting, if accurate, depicts an approach in stark contrast to that of generative AI video rivals such as OpenAI, which is said to have scraped publicly available web data, including videos from YouTube, to train its models. YouTube CEO Neal Mohan recently said that using YouTube videos to train OpenAI’s text-to-video generator would be a violation of the platform’s terms of service, highlighting the legal tenuousness of the fair use argument made by OpenAI and others.

Companies, including OpenAI, are being sued over allegations that they are violating copyright law by training their AI on copyrighted content without providing credit or payment to the owners. Adobe seems intent on avoiding this end, like its sometime generative AI competitors Shutterstock and Getty Images (which also have arrangements to license model training data), and, with its IP indemnity policy, on positioning itself as a verifiably “safe” option for enterprise customers.

On the subject of payment, Adobe isn’t saying how much it will cost customers to use the upcoming video generation features in Premiere. Presumably, pricing is still being worked out. But the company did reveal that the payment scheme will follow the generative credits system established with its early Firefly models.

For customers with a paid subscription to Adobe Creative Cloud, generative credits renew at the start of each month, with allotments ranging from 25 to 1,000 per month depending on the plan. More complex workloads (e.g. higher-resolution generated images or multiple image generations) require more credits, as a general rule.

The big question in my mind is, will Adobe’s AI-powered video features be worth whatever they end up costing?

The Firefly image generation models have so far been widely derided as underwhelming and flawed compared to Midjourney, OpenAI’s DALL-E 3 and other rival tools. The lack of a release timeframe for the video model doesn’t instill much confidence that it will avoid the same fate. Nor does the fact that Adobe declined to show me live demos of object addition, object removal and generative extend, insisting instead on a prerecorded reel.

Perhaps to hedge its bets, Adobe says that it’s in talks with third-party vendors about integrating their video generation models into Premiere as well, to power tools like generative extend and more.

One of these vendors is OpenAI.

Adobe says it’s working with OpenAI on ways to bring Sora into the Premiere workflow. (An OpenAI tie-up makes sense given the AI startup’s recent overtures to Hollywood; tellingly, OpenAI CTO Mira Murati will be attending the Cannes Film Festival this year.) Other early partners include Pika, a startup building AI tools to generate and edit videos, and Runway, which was one of the first vendors to market with a generative video model.

An Adobe spokesperson said the company would be open to working with others in the future.

To be clear, these integrations are more of a thought experiment than a working product at present. Adobe stressed to me repeatedly that they are in “early preview” and “research” rather than something customers can expect to play with anytime soon.

And that, I’d say, captures the overall tone of Adobe’s generative video announcement.

Adobe is clearly trying to signal with these announcements that it is thinking about generative video, if only in a preliminary sense. It would be foolish not to: to get caught flat-footed in the generative AI race is to risk losing out on a valuable potential new revenue stream, assuming the economics eventually work out in Adobe’s favor. (AI models are expensive to train, run and serve, after all.)

But what it’s showing (concepts) isn’t super compelling, frankly. With Sora in the wild and surely more innovations coming down the pipeline, the company has a lot to prove.