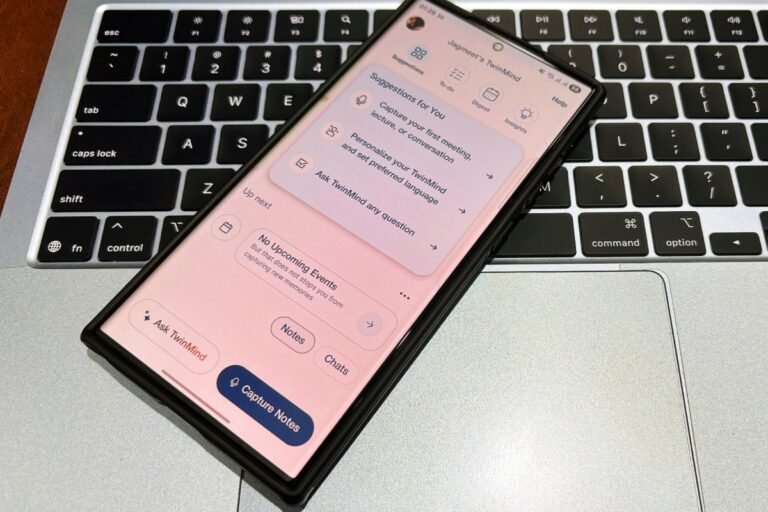

Three former Google X scientists want to give you a second brain, not in the sci-fi or chip-in-your-head sense, but through an AI-powered app that gains context by listening to everything you say in the background. Their startup, TwinMind, has raised $5.7 million in seed funding and has released an Android version, along with a new AI speech model. It also has an iPhone version.

Co-founded in March 2024 by Daniel George (CEO) and his former Google X colleagues Sunny Tang and Mahi Karim (both CTOs), TwinMind runs in the background, capturing ambient speech (with user permission) to build a personal knowledge graph.

By turning spoken thoughts, meetings, lectures and conversations into structured memory, the app can generate AI-powered notes, to-dos and answers. It works offline, processing audio in real time to transcribe on the device, and can capture audio continuously for 16 to 17 hours without draining the device’s battery, the founders say. The app can also back up user data so that conversations can be recovered if the device is lost, although users can opt out. It also supports real-time translation in more than 100 languages.

TwinMind differs from AI meeting note-takers such as Otter, Granola and Fireflies by capturing audio passively in the background all day. To make this possible, the team built a low-level service in pure Swift that runs natively on the iPhone. By contrast, many competitors use React Native and rely on cloud-based processing, which Apple restricts from running in the background for extended periods, George said in an exclusive interview.

“We spent about six to seven months last year just perfecting this audio capture and figuring out a lot of hacks around Apple’s walled garden,” he told TechCrunch.

George left Google X in 2020 and got the idea for TwinMind in 2023 while working at JPMorgan as Vice President and Applied AI Lead, attending back-to-back meetings every day. To save time, he built a script that captured audio, transcribed it on his iPad and fed it into ChatGPT, which began to understand his projects and even generate usable code. Impressed by the results, he shared it with friends and posted about it on Blind, where others showed interest but didn’t want anything running on their work laptops. This led him to build an app that could run on a personal phone, listening quietly during meetings to gather useful context.

In addition to the mobile app, TwinMind offers a Chrome extension that gathers additional context through browser activity. Using vision AI, it can scan open tabs and interpret content from various platforms, including email, Slack and Notion.

The startup even used the extension itself to shortlist candidates from the more than 850 applications it received this summer.

“We opened all the LinkedIn profiles and CVs of the 854 applicants in browser tabs and then asked the Chrome extension to rank the best candidates,” George said. “It did a fantastic job. That’s how we hired our last four interns.”

He noted that current AI chatbots, including OpenAI’s ChatGPT and Anthropic’s Claude, cannot easily ingest hundreds of documents or take in logged-in content from tools such as LinkedIn or Gmail to gather context. Similarly, AI-powered browsers, such as those from Perplexity and The Browser Company, are not able to build context from offline conversations and in-person meetings.

The startup currently has more than 30,000 users, with about 15,000 of them active each month. Between 20% and 30% of TwinMind users also use the Chrome extension, George said.

While the U.S. is the largest user base for TwinMind so far, the startup is also seeing traction in India, Brazil, the Philippines, Ethiopia, Kenya and Europe.

TwinMind targets the general public, although 50% to 60% of its users are currently professionals, about 25% are students and the remaining 20% to 25% are people who use it for personal purposes.

George told TechCrunch that his father is among the people using TwinMind to write an autobiography.

One of the significant drawbacks of AI is its potential to compromise user privacy. But George claimed that TwinMind does not train its models on user data and is designed to operate without sending recordings to the cloud. Unlike many other AI note-taking apps, TwinMind does not let users access audio recordings later: the audio is deleted on the fly, while the transcribed text is stored locally in the app, he noted.

Google X experience helped speed things up

TwinMind’s co-founders spent a few years working on various projects at Google X. George told TechCrunch that he alone worked on six projects, including iyO, the team behind the AI earbuds that recently made headlines for its lawsuit against OpenAI and Jony Ive. This experience helped the TwinMind team move quickly from idea to product.

“Google X was actually the perfect place to prepare for starting your own company,” George said. “There are about 30 to 40 startup-like projects going on at any time. No one else gets to work at six startups over two or three years before starting their own, at least not in such a short time.”

Prior to joining Google, George worked on applying deep learning to gravitational-wave astrophysics as part of the Nobel Prize-winning LIGO group at the University of Illinois’ National Center for Supercomputing Applications. He completed his PhD in AI for astrophysics in just one year, at the age of 24, a feat that led him to join Stephen Wolfram’s research lab in 2017 as a deep learning and AI researcher.

This early relationship with Wolfram came full circle years later: he went on to write the first check for TwinMind, marking his first investment in a startup. The recent seed round was led by Streamlined Ventures, with participation from Sequoia Capital and other investors, including Wolfram. The round values TwinMind at $60 million post-money.

TwinMind Ear-3 model

In addition to the apps and browser extension, TwinMind also introduced the TwinMind Ear-3 model, a successor to the existing Ear-2, which supports over 140 languages worldwide and has a word error rate of 5.26%, the startup said. The new model can also recognize different speakers in a conversation and has a speaker diarization error rate of 3.8%.
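
For readers unfamiliar with the metric, word error rate is conventionally the number of word substitutions, deletions and insertions needed to turn a transcript into the reference text, divided by the number of words in the reference. The Python sketch below illustrates that standard definition only. It is not TwinMind’s evaluation code, and the function name and sample sentences are made up for illustration.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Standard WER: word-level edit distance divided by reference length.

    Illustrative helper only; the name and the whitespace tokenization
    are assumptions, not anything published by TwinMind.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from transcript
            insertion = curr[j - 1] + 1  # extra word in transcript
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


# One wrong word out of six reference words.
print(word_error_rate("the cat sat on the mat", "the cat sat on a mat"))
```

By this definition, a 5.26% word error rate corresponds to roughly five word-level errors per 100 reference words.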

The new AI model is a fine-tuned blend of several open-source models, trained on a dataset of internet content, including podcasts, videos and movies.

“We found that the more languages you support, the better the model gets at understanding accents and regional dialects, because it is trained on a wider range of speakers,” George said.

The model costs $0.23 per hour and will be available through an API to developers and enterprises in the coming weeks.

Ear-3, unlike Ear-2, does not support a fully offline experience, as it is larger and runs in the cloud. However, the app automatically switches to Ear-2 if the internet connection drops and then moves back to Ear-3 when it returns, George said.
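
The switching behavior George describes amounts to choosing a model per audio chunk based on connectivity. The Python sketch below is a minimal illustration of that idea under stated assumptions. The model identifiers, function names and per-chunk granularity are hypothetical, and the app’s actual implementation has not been published.

```python
# Hypothetical identifiers, used here for illustration only.
CLOUD_MODEL = "ear-3"      # larger model, needs an internet connection
ON_DEVICE_MODEL = "ear-2"  # smaller model, works offline


def select_model(is_online: bool) -> str:
    """Prefer the cloud model; fall back to the on-device one when offline."""
    return CLOUD_MODEL if is_online else ON_DEVICE_MODEL


def route_chunks(connectivity: list[bool]) -> list[str]:
    """Pick a model for each audio chunk from its connectivity status."""
    return [select_model(online) for online in connectivity]


# The connection drops for two chunks, then comes back.
print(route_chunks([True, False, False, True]))
```

Because the choice is made again for each chunk, the return to the cloud model needs no special handling once the connection is restored.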

With the introduction of Ear-3, TwinMind now offers a Pro subscription at $15 per month, with a larger context window of up to 2 million tokens and email support within 24 hours. However, there is still a free version with all existing features, including unlimited transcription and speech recognition.

The startup has a team of 11 today. It plans to hire some designers to improve the user experience and to build a business development team to sell its API. There are also plans to spend some money on acquiring new users.