OpenStack allows enterprises to manage their own AWS-like private clouds on-premises. Even after 29 releases, it’s still one of the most active open source projects in the world, and this week, the OpenInfra Foundation, which shepherds the project, announced the release of version 29 of OpenStack. Dubbed “Caracal,” this new release emphasizes new features for hosting AI and high-performance computing (HPC) workloads.

The typical user of OpenStack is a large enterprise. This could be a retailer like Walmart or a large telecommunications company like NTT. What almost all businesses have in common right now is that they are thinking about how to get their AI models into production while keeping their data secure. For many, this means maintaining absolute control of the entire stack.

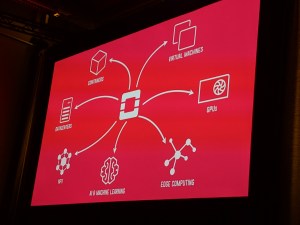

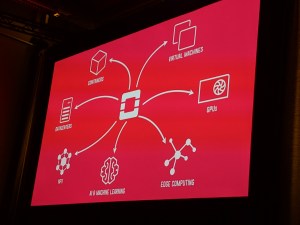

OpenInfra Foundation COO Mark Collier

As Nvidia CEO Jensen Huang recently noted, we’re on the cusp of a multi-trillion dollar investment wave going into data center infrastructure. A lot of that is investment from the big hyperscalers, but a lot of it will also go to private deployments — and those data centers need a layer of software to manage them.

This puts OpenStack in an interesting position right now as one of the only comprehensive alternatives to VMware’s offerings. VMware faces its own problems, as many of its users aren’t happy about its sale to Broadcom, and more than ever, they are looking for alternatives. “With Broadcom’s acquisition of VMware and some of the licensing changes they’ve made, we’ve had a lot of companies come to us and take another look at OpenStack,” explained OpenInfra Foundation Executive Director Jonathan Bryce.

Image Credits: Frederic Lardinois/TechCrunch

OpenStack’s major growth in recent years has been driven by its adoption in the Asia-Pacific region. Indeed, as the OpenInfra Foundation announced this week, its newest Platinum member is Okestro, a South Korean cloud provider with a heavy focus on artificial intelligence. But Europe, with its strong data sovereignty laws, has also been a growth market; the UK’s Dawn AI supercomputer runs OpenStack, for example.

“All things are aligning for a big recovery and adoption of open source for infrastructure,” OpenInfra Foundation COO Mark Collier told TechCrunch. “That means OpenStack primarily, but also Kata Containers and some of our other projects. So it’s very exciting to see another wave of infrastructure upgrades give our community an important project to complete for many years to come.”

In practical terms, some of the new features in this release include support for vGPU live migrations in Nova, OpenStack’s core compute service. This means that users can now move GPU workloads from one physical server to another with minimal impact on those workloads, something businesses have been asking for as they look to manage their expensive GPU hardware as efficiently as possible. Live migration for CPUs has long been a standard feature of Nova, but this is the first time it’s available for GPUs as well.

The latest release also brings a number of security improvements, including role-based access control for more core OpenStack services, such as the Ironic bare-metal-as-a-service project. This is in addition to networking updates to better support HPC workloads and a number of other updates. You can find the full release notes here.


This update is also the first since OpenStack moved to the “Skip Level Upgrade Release Process” (SLURP) a year ago. The OpenStack project churns out a new release every six months, but that’s too fast for most businesses, and in the early days of the project, most users would describe the upgrade process as “painful” (or worse).

Today, upgrades are much easier and the project is also much more stable. The SLURP cadence introduces something akin to a long-term release cycle: every other release, arriving once a year, is an easy-to-upgrade SLURP release, even as teams continue to ship major updates on the original six-month cycle for those who want a faster pace.

Over the years, OpenStack has gone through ups and downs in terms of perception. But it is now a mature system supported by a viable ecosystem, something that wasn’t necessarily the case at the height of its first hype cycle ten years ago. In recent years, it has found great success in the world of telecommunications, which has allowed it to go through this maturation phase, and today, it may just be in the right place at the right time to take advantage of the AI boom.