Firefly, Adobe’s family of AI models, doesn’t have the best reputation among creatives.

In particular, the Firefly image generation model has been derided as underwhelming and flawed compared to Midjourney, OpenAI’s DALL-E 3 and other competitors, with a tendency to distort limbs and landscapes and miss nuances in prompts. But Adobe is trying to right the ship with its third-generation model, Firefly Image 3, which is being released this week during the company’s Max London conference.

The model, now available in Photoshop (beta) and the Adobe Firefly web app, produces more “realistic” images than its predecessor (Image 2) and its predecessor’s predecessor (Image 1) thanks to its ability to understand longer, more complex prompts and scenes, as well as improved lighting and text generation capabilities. It should more accurately render things like typography, iconography, raster images and line art, Adobe says, and is “significantly” more capable of depicting dense crowds and people with “detailed features” and “a variety of moods and expressions.”

For what it’s worth, in my brief, unscientific testing, Image 3 does seem to be a step up from Image 2.

I have not been able to try Image 3 myself. But Adobe PR sent some outputs and prompts from the model, and I was able to run those same prompts through Image 2 on the web to get samples to compare with the Image 3 outputs. (Note that the Image 3 outputs could have been cherry-picked.)

Notice the lighting in this headshot from Image 3 compared to the one below it, from Image 2:

From Image 3. Prompt: “Studio portrait of young woman.”

Same prompt as above. From Image 2.

The Image 3 output looks more detailed and lifelike to my eyes, with shading and contrast that are largely absent from the Image 2 sample.

Here is a set of images showing Image 3’s scene understanding at work:

From Image 3. Prompt: “An artist in her studio sits at her desk and looks pensively at many paintings and ethereals.”

Same prompt as above. From Image 2.

Note that the Image 2 sample is quite basic compared to the Image 3 output in terms of level of detail and overall expressiveness. There is some wonkiness in the shirt of the subject in the Image 3 sample (around the waist area), but the pose is more complex than that of the subject in the Image 2 sample. (And the clothes in the Image 2 sample are also a bit off.)

Some of the improvements in Image 3 can no doubt be traced to a larger and more diverse training dataset.

Like Image 2 and Image 1, Image 3 is trained on uploads to Adobe Stock, Adobe’s royalty-free media library, along with licensed content and public domain content for which copyright has expired. Adobe Stock is constantly growing, and consequently so is the available training dataset.

In an effort to fend off lawsuits and position itself as a more “ethical” alternative to generative AI vendors that train on images indiscriminately (e.g., OpenAI, Midjourney), Adobe has a program to pay Adobe Stock contributors to the training dataset. (We’ll note that the terms of the program are rather opaque, however.) Controversially, Adobe also trains Firefly models on AI-generated images, which some consider a form of data laundering.

A recent Bloomberg report revealed that AI-generated images in Adobe Stock are not excluded from the training data of the Firefly image generation models, a worrying prospect considering that these images may contain regurgitated copyrighted material. Adobe has defended the practice, arguing that AI-generated images make up only a small part of its training data and go through a moderation process to ensure they don’t depict trademarks or recognizable characters or reference artists’ names.

Of course, neither diverse, more “ethically” sourced training data nor content filters and other safeguards guarantee a perfectly flawless experience; witness users generating people flipping the bird with Image 2. The real test of Image 3 will come once the community gets its hands on it.

New possibilities with artificial intelligence

Image 3 brings many new features to Photoshop beyond improved text-to-image conversion.

A new “style engine” in Image 3, along with a new auto-stylization toggle, allows the model to generate a wider variety of colors, backgrounds and subject poses. They feed into Reference Image, an option that lets users condition the model on an image whose colors or tone they want their future generated content to align with.

Three new generative tools (Generate Background, Generate Similar and Enhance Detail) leverage Image 3 to perform precision edits on images. The (self-explanatory) Generate Background replaces a background with a generated one that blends into the existing image, while Generate Similar offers variations on a selected part of a photo (a person or object, for example). As for Enhance Detail, it “optimizes” images to improve sharpness and clarity.

If these features sound familiar, that’s because they’ve been in beta on the Firefly web app for at least a month (and on Midjourney for much longer than that). This marks their debut in Photoshop — in beta.

Speaking of the web app, Adobe isn’t neglecting this alternative route to its AI tools.

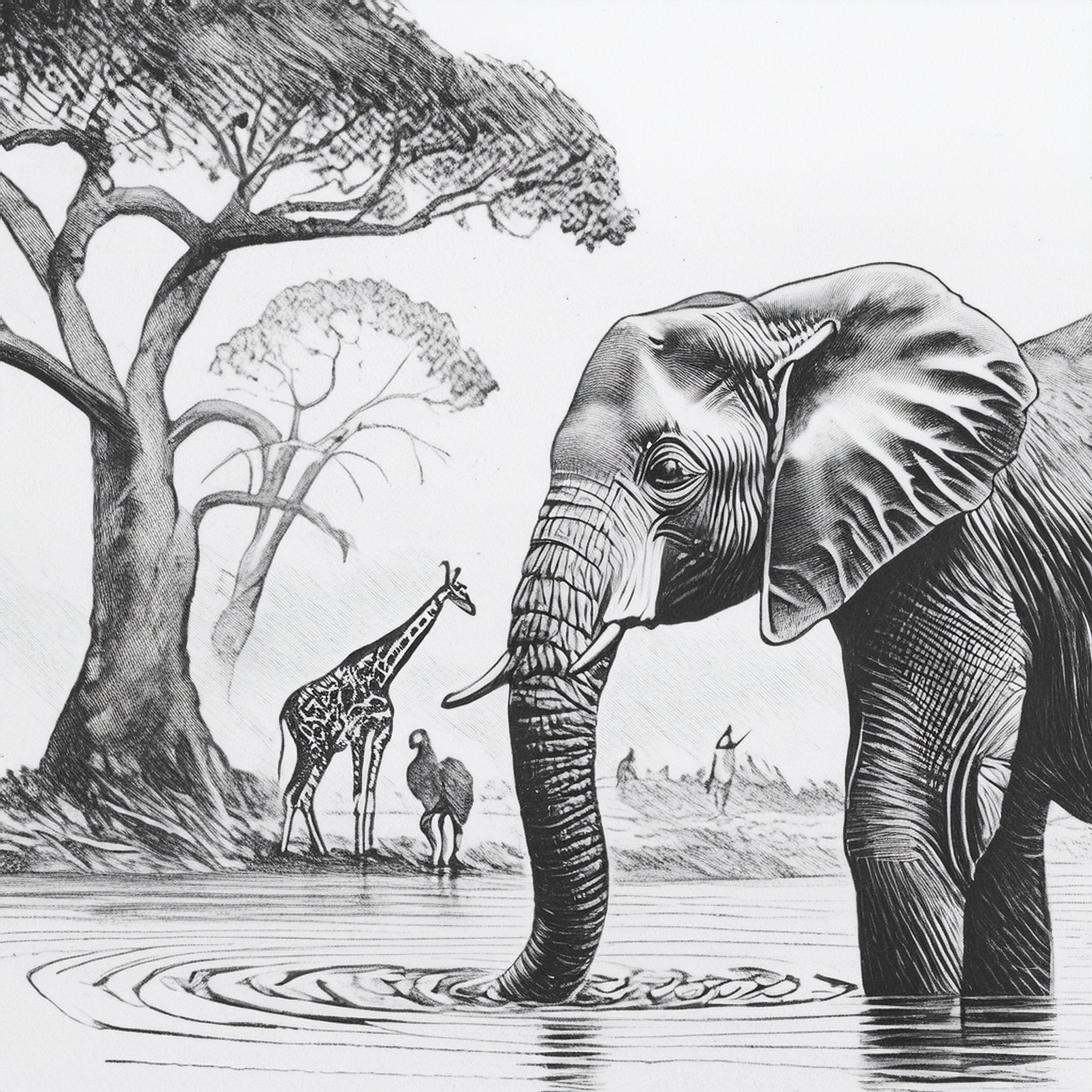

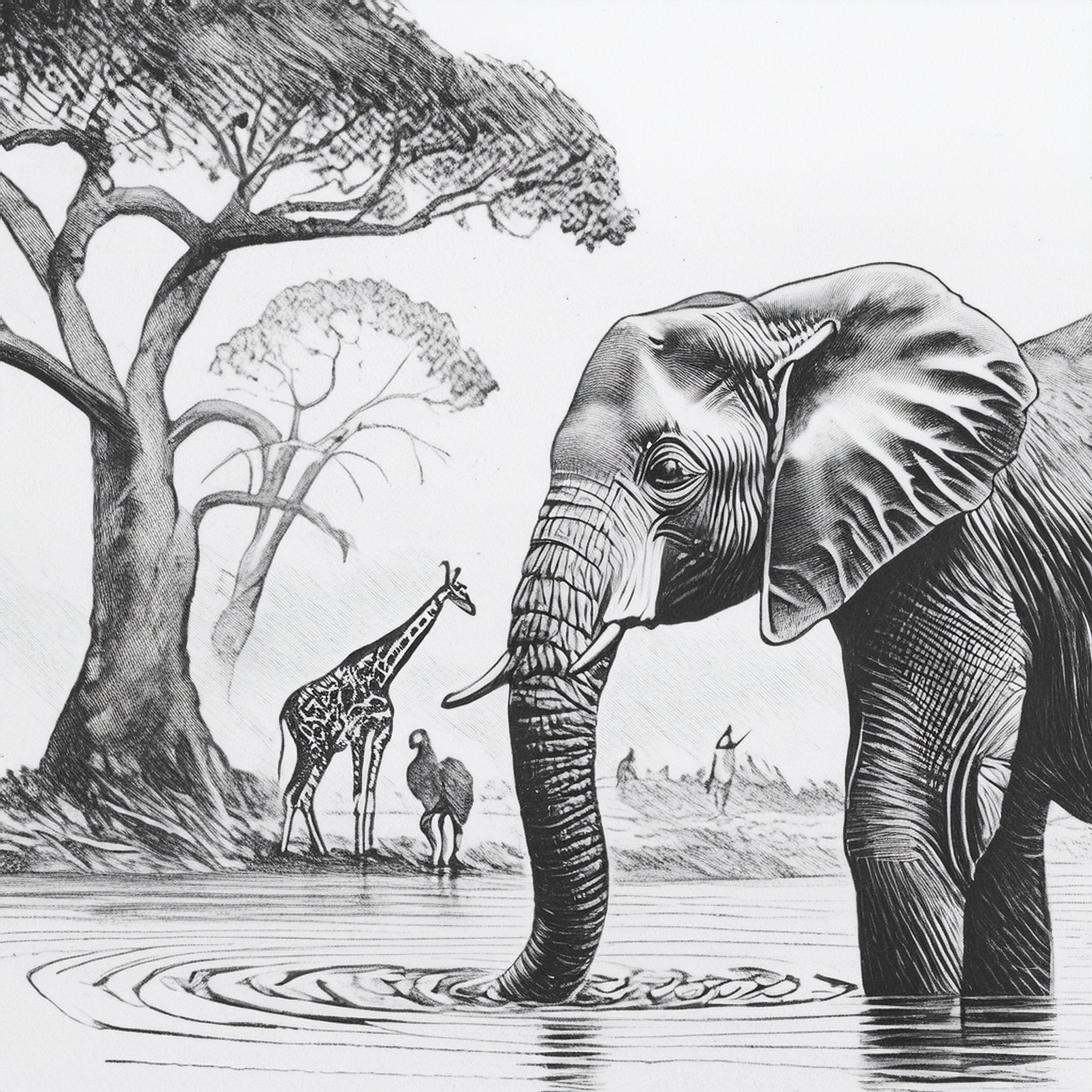

To coincide with the release of Image 3, the Firefly web app is getting Structure Reference and Style Reference, which Adobe touts as new ways to “promote creative control.” (Both were announced in March, but are now becoming widely available.) With Structure Reference, users can generate new images that match the “structure” of a reference image (say, a view of a race car). Style Reference is essentially style transfer by another name, preserving the content of an image (e.g., elephants on an African safari) while mimicking the style (e.g., pencil sketch) of a target image.

Here’s Structure Reference in action:

Original image.

Transformed with Structure Reference.

And Style Reference:

Original image.

Transformed with Style Reference.

I asked Adobe if, with all the upgrades, the pricing for Firefly image generation would change. Currently, the cheapest Firefly premium plan is $4.99 per month, undercutting competition like Midjourney ($10 per month) and OpenAI (which gates DALL-E 3 behind a $20-per-month ChatGPT Plus subscription).

Adobe said its current tiers will remain in place for now, along with its generative credit system. It also said that its indemnification policy, under which Adobe will pay copyright claims related to works generated in Firefly, will not change, nor will its approach to watermarking AI-generated content. Content Credentials, metadata that identifies AI-generated media, will continue to be automatically attached to all Firefly image generations on the web and in Photoshop, whether created from scratch or partially edited using generative features.