Google DeepMind on Thursday shared a research preview of SIMA 2, the next generation of its generalist AI agent, which integrates the language and reasoning powers of Gemini, Google’s large language model, to move beyond simply following instructions to understanding and interacting with its environment.

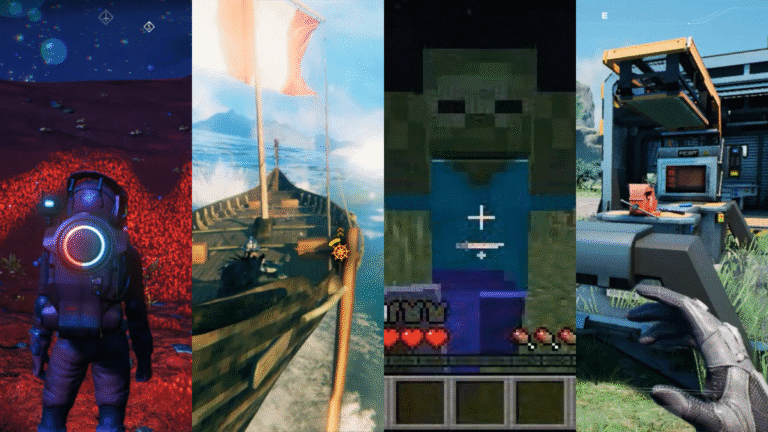

Like many of DeepMind’s projects, including AlphaFold, the first version of SIMA was trained on hundreds of hours of video game data to learn how to play many 3D games like a human, even some games it wasn’t trained to play. SIMA 1, unveiled in March 2024, could follow basic instructions in a wide range of virtual environments, but had only a 31% success rate for completing complex tasks, compared to 71% for humans.

“SIMA 2 is a step change and an improvement over SIMA 1,” said Joe Marino, a senior researcher at DeepMind, in a press briefing. “It’s a more general agent. It can complete complex tasks in previously unseen environments. And it’s a self-improving agent. So it can actually improve itself based on its own experience, which is a step toward more general-purpose robots and AGI systems in general.”

SIMA 2 is powered by the Gemini 2.5 Flash-Lite model. AGI refers to artificial general intelligence, which DeepMind defines as a system capable of a wide range of intellectual tasks with the ability to learn new skills and generalize knowledge across different domains.

Working with so-called “embodied agents” is critical to generalized intelligence, DeepMind researchers say. Marino explained that an embodied agent interacts with a physical or virtual world through a body, observing inputs and taking actions much as a robot or a human would, while a non-embodied agent might interact with your calendar, take notes or run code.

Jane Wang, a senior staff researcher at DeepMind with a background in neuroscience, told TechCrunch that SIMA 2 goes beyond gaming.

“We’re asking it to really understand what’s going on, understand what the user is asking it to do, and then be able to respond in a common-sense way that’s actually quite difficult,” Wang said.

By integrating Gemini, SIMA 2 doubled the performance of its predecessor, combining Gemini’s advanced language and reasoning abilities with the embodied skills developed through training.

Marino demonstrated SIMA 2 in “No Man’s Sky,” where the agent described its surroundings (a rocky planet surface) and determined its next steps by recognizing and interacting with a distress beacon. SIMA 2 also uses Gemini to reason internally. In another game, when asked to walk to the house that is the color of a ripe tomato, the agent showed its reasoning (ripe tomatoes are red, so I should go to the red house), then found the house and approached it.

Being Gemini-powered also means that SIMA 2 follows emoji-based instructions: “You give it instructions 🪓🌲 and it will chop down a tree,” Marino said.

Marino also showed how SIMA 2 can navigate new photorealistic worlds generated by Genie, DeepMind’s world model, correctly recognizing and interacting with objects such as benches, trees and butterflies.

Gemini also allows for self-improvement without much human input, Marino added. Where SIMA 1 was trained entirely on human gameplay, SIMA 2 uses human gameplay as a baseline to provide a strong initial model. When the team places the agent in a new environment, it asks another Gemini model to generate new tasks and a separate reward model to score the agent’s attempts. Using these self-generated experiences as training data, the agent learns from its own mistakes and gradually performs better, essentially teaching itself new behaviors through trial and error as a human would, guided by AI-based feedback instead of humans.
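To make that loop concrete, here is a toy Python sketch of its general shape: one component proposes tasks, the agent attempts them, a second component scores each attempt, and the scored attempts become training data. It is an illustration only, not DeepMind’s implementation. Every name in it (`propose_task`, `score_attempt`, `ToyAgent`, `self_improve`) is hypothetical, the Gemini task generator and the reward model are replaced by trivial stand-ins, and “training” is reduced to nudging a per-task success probability upward after well-rated attempts.

```python
import random

# Toy illustration only. All names are hypothetical stand-ins, not DeepMind APIs.
TASKS = ["chop tree", "open door", "find beacon"]


def propose_task(rng):
    """Stand-in for the task-generating model: pick a task to attempt."""
    return rng.choice(TASKS)


def score_attempt(succeeded):
    """Stand-in for the reward model: rate one attempt as 1.0 or 0.0."""
    return 1.0 if succeeded else 0.0


class ToyAgent:
    def __init__(self, base_skill=0.2):
        # base_skill stands in for the initial model trained on human play.
        self.skill = {task: base_skill for task in TASKS}

    def attempt(self, task, rng):
        """Succeed with probability equal to the current skill on this task."""
        return rng.random() < self.skill[task]

    def train(self, experiences, learning_rate=0.1):
        """Move skill toward 1.0 in proportion to each attempt's reward."""
        for task, reward in experiences:
            self.skill[task] += learning_rate * reward * (1.0 - self.skill[task])


def self_improve(agent, rounds=50, batch_size=10, seed=0):
    """Run the generate, attempt, score, train cycle for a number of rounds."""
    rng = random.Random(seed)
    for _ in range(rounds):
        experiences = []
        for _ in range(batch_size):
            task = propose_task(rng)
            succeeded = agent.attempt(task, rng)
            experiences.append((task, score_attempt(succeeded)))
        # The agent's own scored attempts are the only training signal here.
        agent.train(experiences)
    return agent
```

Calling `self_improve(ToyAgent())` and inspecting `agent.skill` shows the per-task values drifting upward from the 0.2 baseline. In this simplification only successful attempts change the agent, whereas the system described above is said to learn from its mistakes as well.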

DeepMind sees SIMA 2 as a step toward unlocking more general-purpose robots.

“If we think about what a system needs to do to perform real-world tasks, like a robot, I think there are two components to it,” Frederic Besse, a senior research engineer at DeepMind, said during a press briefing. “First, there’s a high-level understanding of the real world and what needs to be done, as well as some logic.”

If you ask a humanoid robot in your home to go check how many cans of beans you have in the cupboard, the system has to understand all the different concepts (what beans are, what a cupboard is) and navigate to that location. Besse says SIMA 2 touches more on this high-level behavior than on lower-level actions, which he describes as controlling things like physical joints and wheels.

The team declined to share a specific timeline for implementing SIMA 2 in physical robotics systems. Besse told TechCrunch that DeepMind’s recently unveiled robotics foundation models, which can also reason about the physical world and create multi-step plans to complete a mission, were trained separately from SIMA.

While there’s also no timetable for releasing more than a preview of SIMA 2, Wang told TechCrunch that the goal is to show the world what DeepMind is working on and see what kinds of collaborations and potential uses are possible.