On Saturday, Triplegangers CEO Oleksandr Tomchuk was alerted that his company's e-commerce site was down. It looked to be some kind of distributed denial-of-service attack.

He soon discovered that the culprit was a bot from OpenAI that was relentlessly trying to scrape his entire, massive website.

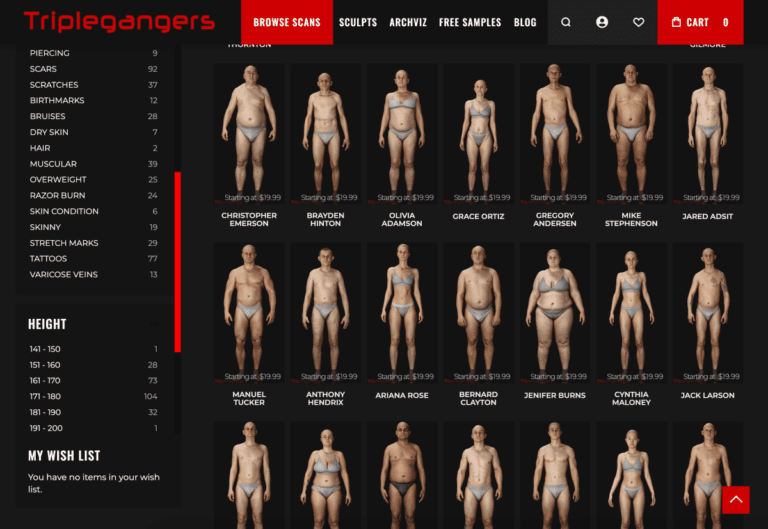

“We have over 65,000 products, every product has a page,” Tomchuk told TechCrunch. “Each page has at least three photos.”

OpenAI was sending "tens of thousands" of server requests trying to download all of it: hundreds of thousands of photos, along with their detailed descriptions.

"OpenAI used 600 IPs to scrape data, and we are still analyzing logs from last week; perhaps it's way more," he said of the IP addresses the bot used to try to consume his site.

"Their crawlers were crashing our site," he said. "Basically it was a DDoS attack."

The Triplegangers website is its business. The seven-employee company has spent over a decade assembling what it calls the largest database of "human digital doubles" on the web, meaning 3D image files scanned from actual human models.

It sells its 3D object files, as well as photos – from hands to hair, skin and body – to 3D artists, video game makers, anyone who needs to digitally recreate authentic human features.

Tomchuk's team, based in Ukraine but also licensed in the US out of Tampa, Florida, has a terms of service page on its site that forbids bots from taking its images without permission. But that alone did nothing. Websites must use a properly configured robots.txt file with tags specifically telling OpenAI's bot, GPTBot, to leave the site alone. (OpenAI also has a couple of other bots, ChatGPT-User and OAI-SearchBot, that have their own tags, according to its information page on its crawlers.)

Robots.txt, otherwise known as the Robots Exclusion Protocol, was created to tell search engine crawlers what not to crawl as they index the web. OpenAI says on its information page that it honors such files when they are configured with its own set of do-not-crawl tags, though it also warns that it can take its bots up to 24 hours to recognize an updated robots.txt file.
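For illustration, a robots.txt that disallows the three OpenAI user-agent tokens named above might look like the `ROBOTS_TXT` string below. This minimal sketch uses Python's standard `urllib.robotparser` to check which agents the file tells to stay away. The example URL, the catch-all `Allow` rule, and the helper name `blocked_agents` are assumptions for illustration, and the check only reflects what a compliant crawler should do, since honoring the file is up to the bot.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: one group per user-agent token named in the article,
# plus an assumed catch-all group that leaves the site open to everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: ChatGPT-User
Disallow: /

User-agent: OAI-SearchBot
Disallow: /

User-agent: *
Allow: /
"""


def blocked_agents(robots_txt: str, agents: list[str], url: str) -> list[str]:
    """Return the agents that robots_txt forbids from fetching url.

    This only tells you what a crawler that *honors* robots.txt should do;
    nothing here enforces the rules.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    return [agent for agent in agents if not parser.can_fetch(agent, url)]
```

Calling `blocked_agents(ROBOTS_TXT, ["GPTBot", "ChatGPT-User", "OAI-SearchBot", "Googlebot"], "https://example.com/products/1")` returns the three OpenAI tokens and leaves Googlebot allowed. Pass the short product token (for example `GPTBot`), not the full user-agent header, because the parser matches on the name before the first `/`.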

As Tomchuk explained, if a site isn't properly using robots.txt, OpenAI and others take that to mean they can scrape to their hearts' content. It's not an opt-in system.

To add insult to injury, not only was Triplegangers taken offline by the OpenAI bot during US business hours, but Tomchuk is expecting a skyrocketing AWS bill thanks to all the bot’s CPU and download activity.

Robots.txt isn't a failsafe, either. Compliance by AI companies is voluntary. Another AI startup, Perplexity, was famously called out last summer by a Wired investigation when some evidence suggested Perplexity wasn't honoring it.

I can’t know for sure what was taken

By Wednesday, after days of OpenAI's bot returning, Triplegangers had a properly configured robots.txt file in place, as well as a Cloudflare account set up to block GPTBot and several other bots Tomchuk discovered, such as Barkrowler (an SEO crawler) and Bytespider (TikTok's crawler). Tomchuk is also hopeful he has blocked crawlers from other AI model companies. As of Thursday morning, the site hadn't crashed, he said.

However, Tomchuk still has no reasonable way to know exactly what OpenAI took or to remove this material. He found no way to contact OpenAI and ask. OpenAI did not respond to TechCrunch’s request for comment. And OpenAI has so far failed to deliver on its long-promised opt-out tool, as TechCrunch recently reported.

This is a particularly difficult issue for Triplegangers. “We’re in a business where rights are kind of a serious issue because we’re scanning real people,” he said. With laws like the European GDPR, “they can’t just take a picture of anyone on the web and use it.”

The Triplegangers site was also a particularly tasty find for AI crawlers. Multibillion-dollar startups like Scale AI have been built on people painstakingly labeling images to train AI. The Triplegangers site contains photos tagged in detail: ethnicity, age, tattoos versus scars, all body types, and so on.

The irony is that the OpenAI bot's greediness is what alerted Triplegangers to how exposed it was. If the bot had scraped more gently, Tomchuk would never have known, he said.

"It's scary because there seems to be a loophole that these companies are using to crawl data by saying 'you can opt out if you update your robots.txt with our tags,'" says Tomchuk, but that puts the onus on the business owner to figure out how to block them.

He wants other small online businesses to know that the only way to find out whether an AI bot is taking a website's copyrighted material is to actively look. He's certainly not alone in being terrorized by them. Owners of other websites recently told Business Insider how OpenAI bots crashed their sites and ran up their AWS bills.

The problem grew in 2024. New research from digital advertising company DoubleVerify found that AI crawlers and scrapers drove an 86% increase in "general invalid traffic" in 2024, meaning traffic that doesn't come from a real user.

Still, "most websites remain unaware that they've been scraped by these bots," warns Tomchuk. "Now we have to monitor log activity daily to spot these bots."
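A minimal sketch of that kind of daily check might count requests and distinct client IPs per crawler, which is how a figure like "600 IPs" surfaces. It assumes the web server writes the common Apache/Nginx "combined" log format; the token list and the function name `summarize_crawlers` are hypothetical. User-agent strings can be spoofed, so the counts are a starting point for investigation rather than proof of who sent the traffic.

```python
import re
from collections import Counter, defaultdict

# Hypothetical starting list: the crawler tokens mentioned in this article.
CRAWLER_TOKENS = ("GPTBot", "ChatGPT-User", "OAI-SearchBot", "Bytespider", "Barkrowler")

# Combined log format: ip ident user [time] "request" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]*\] "[^"]*" \d{3} \S+ "[^"]*" "(?P<ua>[^"]*)"'
)


def summarize_crawlers(lines):
    """Return {token: (request_count, distinct_ip_count)} for known crawler tokens."""
    requests = Counter()
    ips = defaultdict(set)
    for line in lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines that are not in combined format
        user_agent = match.group("ua").lower()
        for token in CRAWLER_TOKENS:
            if token.lower() in user_agent:  # case-insensitive substring match
                requests[token] += 1
                ips[token].add(match.group("ip"))
                break  # attribute each request to at most one token
    return {token: (requests[token], len(ips[token])) for token in requests}
```

Run against a day's access log (for example, the lines of an opened log file), the result shows which bots are hitting the site and from how many addresses, which is the information needed before adding robots.txt or firewall rules.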

When you think about it, the whole model works a bit like a mob shakedown: The AI bots will take whatever they want unless you have protection.

“They should be asking for permission, not just scraping data,” says Tomchuk.
