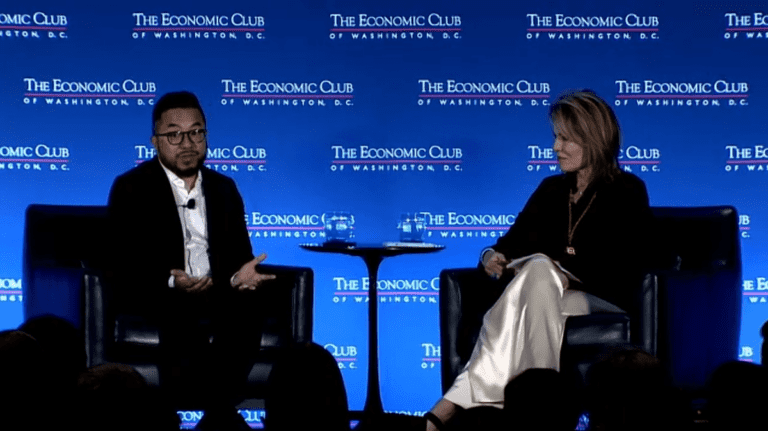

Garry Tan, chairman and CEO of Y Combinator, told a crowd at The Economic Club in Washington this week that “regulation is probably necessary” for artificial intelligence.

Tan spoke with Teresa Carlson, a General Catalyst board member, in a one-on-one interview where he discussed everything from how to get into Y Combinator to artificial intelligence, noting that “there’s no better time to work in technology than right now.”

Tan said he was “overall supportive” of the National Institute of Standards and Technology’s (NIST) effort to create a GenAI risk mitigation framework, and said that “large parts of the EO from the Biden administration are probably on the right track.”

The NIST framework proposes steps such as specifying that GenAI should comply with existing laws governing data privacy and copyright; disclosing GenAI use to end users; and establishing regulations that prohibit GenAI from creating child sexual abuse material. Biden’s executive order covers a wide range of provisions, from requiring AI companies to share safety data with the government to ensuring that small developers have fair access.

But Tan, like many Valley VCs, was wary of other regulatory efforts. He called AI-related bills moving through the California and San Francisco legislatures “very troubling.”

One California bill causing an uproar was introduced by state Sen. Scott Wiener and would allow the attorney general to sue artificial intelligence companies if their products are harmful, Politico reports.

“The big debate across the board in terms of policy right now is what does a good version of this really look like?” Tan said. “We can look at people like Ian Hogarth, in the UK, to be careful. They are also aware of this idea of concentration of power. At the same time, they’re trying to understand how we support innovation while at the same time mitigating the worst possible harms.”

Hogarth is a former YC entrepreneur and AI expert who was recruited by the UK government to lead an AI model task force.

“What scares me is if we try to address a science fiction concern that doesn’t exist,” Tan said.

As for how YC handles responsibility, Tan said that if the organization doesn’t agree with a startup’s mission or what its product would do for society, “YC just doesn’t fund it.” He noted that several times he has read in the media about a company that had applied to YC.

“We go back and look at the interview notes, and it’s like, we don’t think this is good for society. And luckily, we didn’t fund it,” he said.

AI leaders continue to mess up

Tan’s guideline still leaves room for Y Combinator to turn out many AI startups as cohort graduates. As my colleague Kyle Wiggers reported, the Winter 2024 cohort had 86 AI startups, nearly double the number in the Winter 2023 batch and nearly triple the number in Winter 2021, according to YC’s official startup directory.

And recent news events have people wondering whether they can trust those who sell AI products to define responsible AI. Last week, TechCrunch reported that OpenAI was getting rid of its AI responsibility team.

Then there was the debacle in which the company used a voice that sounded like actress Scarlett Johansson’s when demonstrating its new GPT-4o model. It turns out she had been asked about using her voice and turned the company down. OpenAI has since removed the Sky voice, though it denied that the voice was based on Johansson. That, along with questions about OpenAI’s ability to claw back vested employee equity, was among several items that led people to openly question Sam Altman’s scruples.

Meanwhile, Meta made its own AI news when it announced the creation of an AI advisory board made up only of white men, effectively leaving out women and people of color, many of whom were instrumental in the creation and innovation of the industry.

Tan did not mention any of these cases. Like most Silicon Valley VCs, what he sees are opportunities for new, huge, profitable businesses.

“We like to think of startups as an idea maze,” Tan said. “When a new technology comes out, like large language models, the whole idea maze gets shaken up. ChatGPT itself was probably one of the most quickly successful consumer products launched in recent memory. And that’s good news for founders.”

The artificial intelligence of the future

Tan also said that San Francisco is at the epicenter of the AI movement. For example, that’s where Anthropic, started by YC alums, and OpenAI, which was a YC spinout, got their start.

Tan also joked that he wasn’t going to follow in Altman’s footsteps, noting that Altman “had my job several years ago,” and that he has no plans to start an AI lab.

One of YC’s other success stories is legal tech startup Casetext, which sold to Thomson Reuters for $600 million in 2023. Tan believed Casetext was one of the first companies in the world to get access to generative AI, and that it was then one of the first exits in generative AI.

Looking at the future of artificial intelligence, Tan said “obviously, we have to be smart about this technology” as it relates to risks around bioterrorism and cyberattacks. At the same time, he said there should be “a much more measured approach.”

He also hypothesizes that there isn’t likely to be a “winner take all” model, but rather an “incredible garden of freedom of choice for consumers and founders who will be able to create something that touches a billion people.”

At least, that’s what he wants to see happen. This would be in his and YC’s best interest, since many successful startups return a lot of cash to investors. So what scares Tan the most isn’t evil AIs running amok, but a lack of AIs to choose from.

“We might actually be in this other really monopolistic situation where there’s a lot of concentration in just a few models. Then you talk about rent extraction and you have a world I don’t want to live in.”