Your phone’s camera is as much software as hardware, and Glass hopes to improve both. But while its wild anamorphic lens makes its way to market, the company (with $9.3 million in new money) has released an AI camera upgrade that it says significantly improves image quality, without any weird AI upscaling artifacts.

GlassAI is a purely software approach to enhancing images, what the company calls a neural image signal processor (ISP). An ISP is basically what takes the raw sensor output (often flat, noisy and distorted) and turns it into the sharp, colorful images we see.
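To make the idea concrete, here is a minimal sketch of a few classic, hand-tuned ISP stages applied to a single pixel. It is not Glass’s method or any phone maker’s pipeline: the function names, black and white levels, channel gains and gamma value are all assumptions chosen for illustration, and a real ISP would also demosaic, denoise and correct lens distortion.

```python
# Toy sketch of classic ISP stages for one RGB pixel.
# All constants (black level, white level, gains, gamma) are illustrative
# assumptions, not values from Glass or any real phone.

def normalize(raw_rgb, black=64, white=1023):
    """Subtract the sensor's black level and scale raw counts to [0, 1]."""
    span = white - black
    return tuple(min(max((v - black) / span, 0.0), 1.0) for v in raw_rgb)

def white_balance(rgb, gains=(1.8, 1.0, 1.5)):
    """Scale each channel by its own gain, clipping at 1.0."""
    return tuple(min(c * g, 1.0) for c, g in zip(rgb, gains))

def gamma_encode(rgb, gamma=2.2):
    """Apply a simple power curve so linear values look right on a display."""
    return tuple(c ** (1.0 / gamma) for c in rgb)

def toy_isp(raw_rgb):
    """Run the three stages in order: normalize, white balance, gamma."""
    return gamma_encode(white_balance(normalize(raw_rgb)))

# A dim, greenish raw pixel comes out brighter and more neutral.
pixel = toy_isp((200, 300, 150))
```

Each stage here is a separate, manually tuned step, which is the kind of pipeline a neural ISP aims to replace with a single learned mapping.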

ISPs are also increasingly sophisticated, as phone makers like Apple and Google like to show: compositing multiple exposures, quickly detecting and sharpening faces, adjusting for tiny movements, and more. And while many include some form of machine learning or AI, they have to be careful: Using AI to generate detail can produce hallucinations or artifacts as the system tries to create visual information where none exists. Such “super-resolution” models are useful in their place, but they have to be watched carefully.
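Why combine multiple exposures at all? A rough sketch, assuming perfectly aligned frames and simple Gaussian sensor noise (real pipelines have to handle motion and far messier noise): averaging N frames cuts random noise by roughly the square root of N. The function names and numbers below are hypothetical.

```python
import random

def capture_frame(scene, noise_sigma, rng):
    """Simulate one noisy exposure of a scene (a flat list of pixel values)."""
    return [v + rng.gauss(0.0, noise_sigma) for v in scene]

def stack_frames(frames):
    """Average aligned frames pixel by pixel."""
    n = len(frames)
    return [sum(px) / n for px in zip(*frames)]

def rms_error(image, reference):
    """Root-mean-square difference between an image and the true scene."""
    total = sum((a - b) ** 2 for a, b in zip(image, reference))
    return (total / len(reference)) ** 0.5

rng = random.Random(0)   # fixed seed so the run is repeatable
scene = [0.5] * 1000     # a flat gray patch, 1,000 pixels
frames = [capture_frame(scene, 0.1, rng) for _ in range(16)]

single_error = rms_error(frames[0], scene)
stacked_error = rms_error(stack_frames(frames), scene)
print(f"single frame: {single_error:.3f}, 16-frame stack: {stacked_error:.3f}")
```

With 16 frames, the stacked error should land at around a quarter of the single-frame error. Nothing here invents detail; the extra information comes from the additional captures.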

Glass builds both a complete camera system, based on an unusual lozenge-shaped front element, and an ISP to back it up. And while the former is working toward a market presence with some upcoming devices, the latter is, it turns out, a product worth selling on its own.

“Our restoration networks correct optical aberrations and sensor issues while effectively removing noise and outperforming traditional image signal processing pipelines in fine texture recovery,” explained CTO and co-founder Tom Bishop in their news release.

Concept animation showing the process of going from RAW to a Glass-processed image. Image Credits: Glass

The word “recovery” is key, because the details are not simply created but extracted from the raw imagery. Depending on how your camera stack already works, you may know that certain artifacts, angles or noise patterns can be reliably resolved or even exploited. Learning how to turn these implied details into real ones, or to combine details from multiple exposures, is a big part of any computational photography stack. Co-founder and CEO Ziv Attar says the company’s neural ISP is better than any other in the industry.

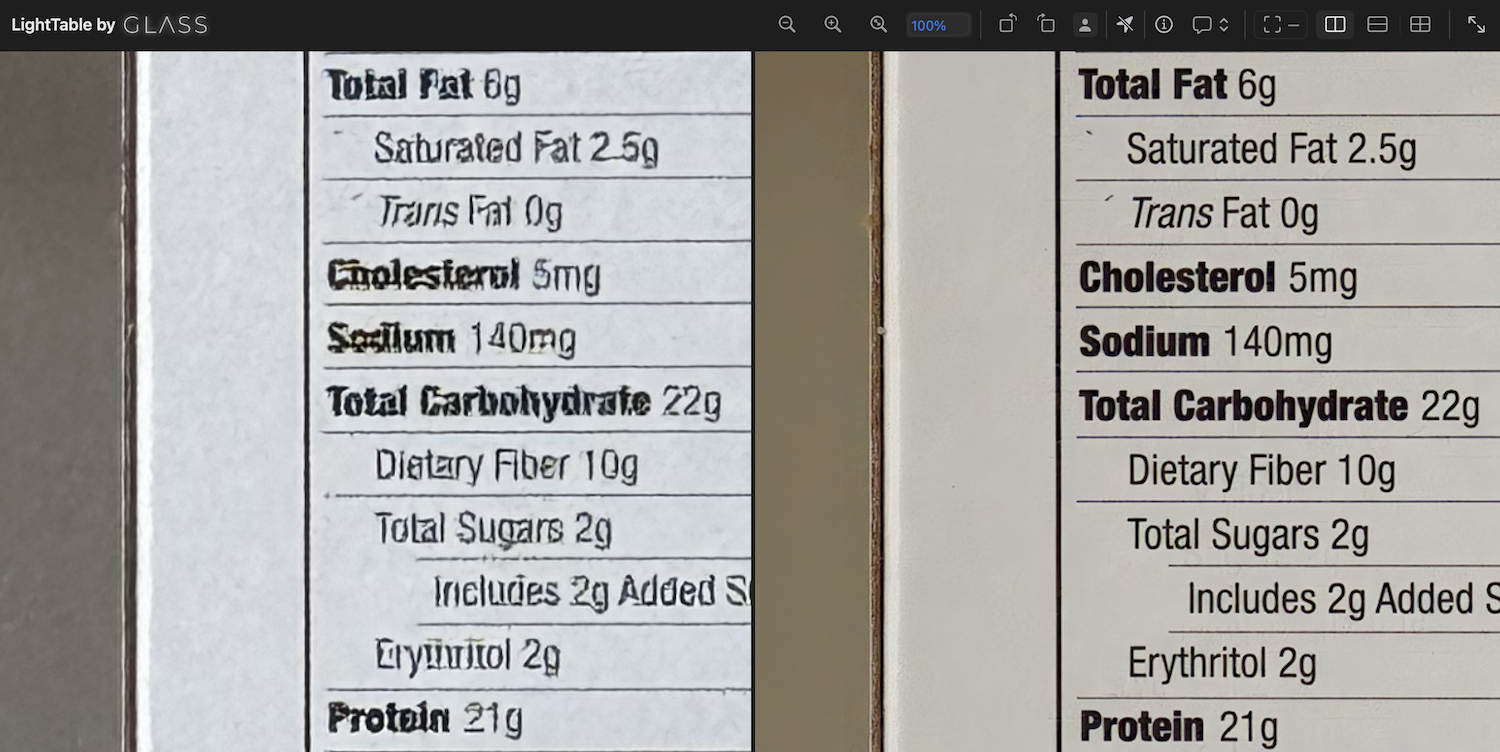

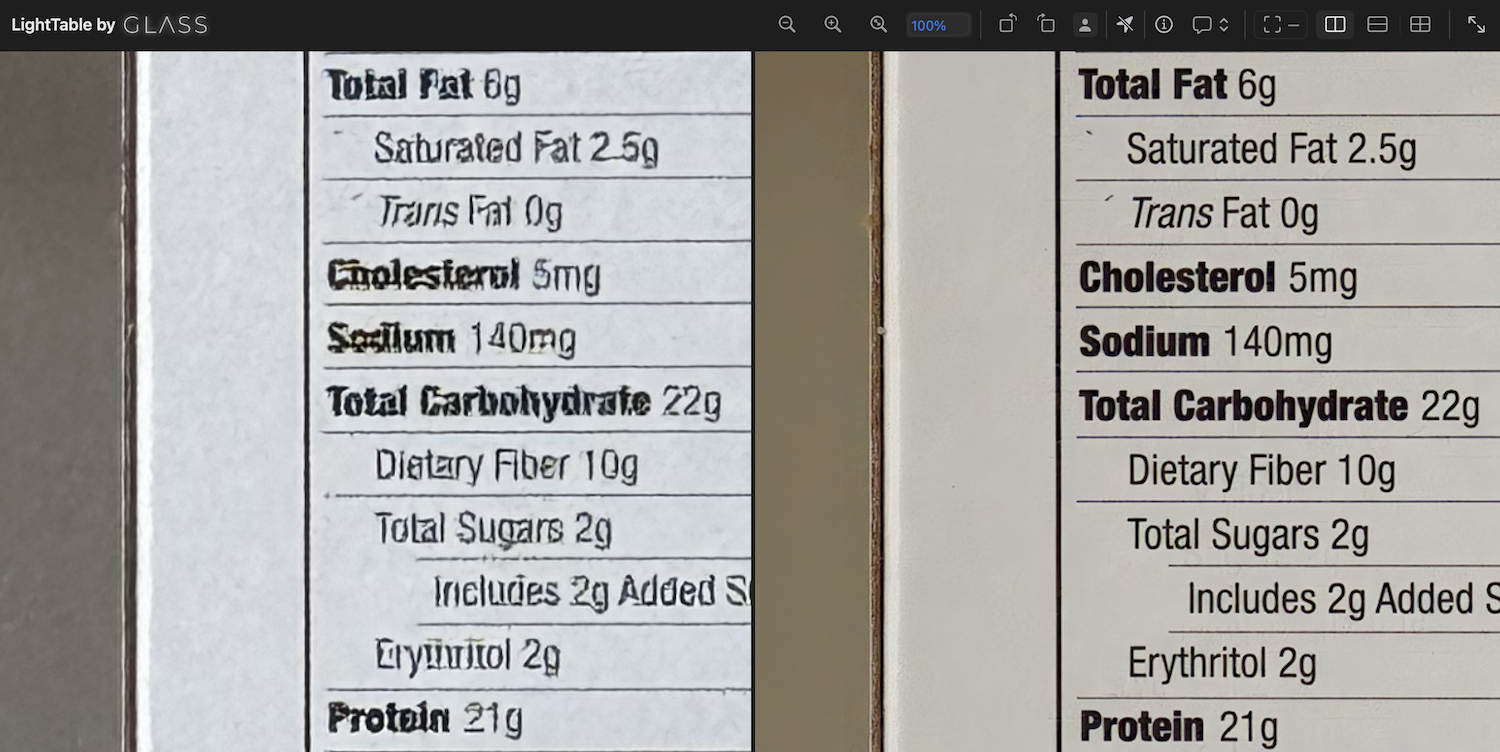

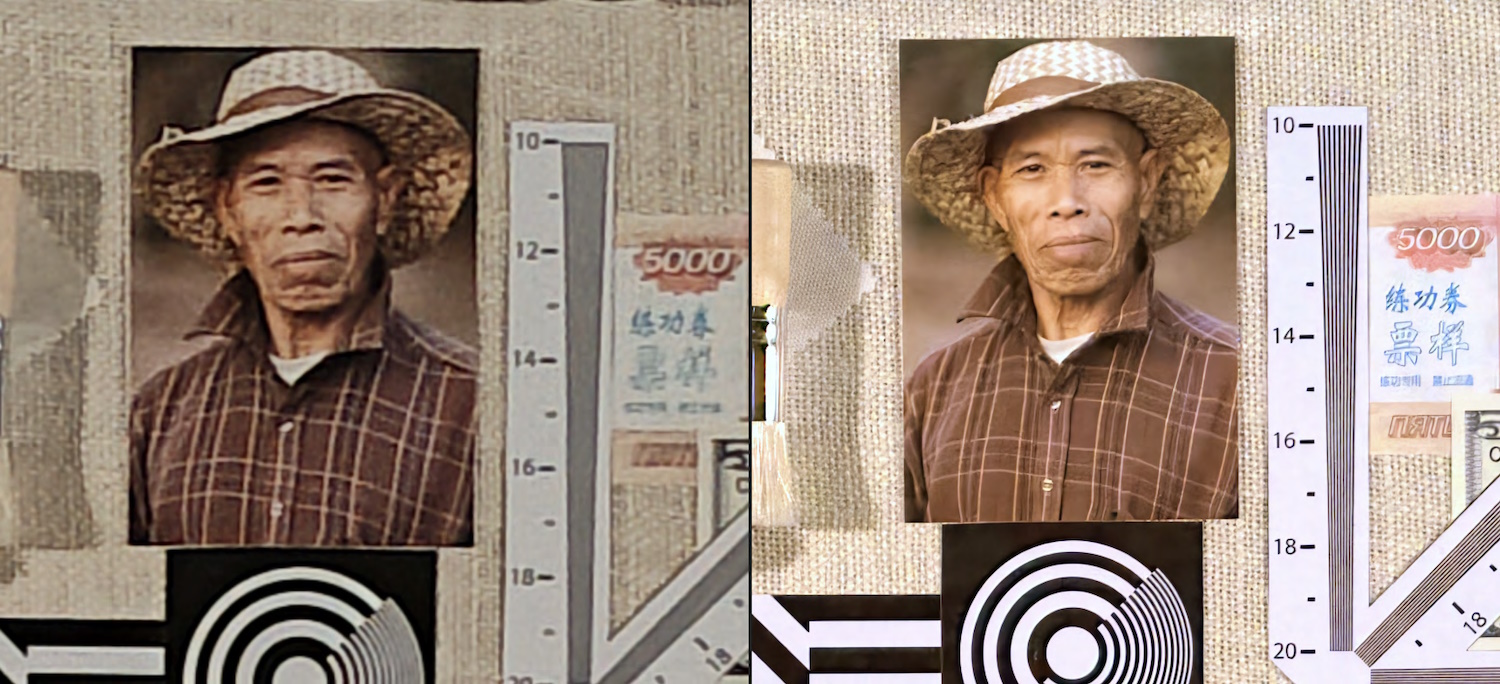

Even Apple, he pointed out, doesn’t have a full neural imaging stack; it uses one only in specific cases where it’s needed, and the results (in his opinion) aren’t great. He provided an example of Apple’s neural ISP misinterpreting text, with Glass faring much better:

Photo provided by Ziv Attar showing an iPhone 15 Pro Max image at 5x zoom and the Glass-processed version of the phone’s RAW images. Image Credits: Ziv Attar

“I think it’s fair to assume that if Apple hasn’t been able to get decent results, it’s hard to solve the problem,” he said. “It’s less about the actual stack and more about how you train. We have a very unique way of doing it that was developed for anamorphic lens systems and is effective on any camera. Basically, we have training labs that include robotics systems and optical calibration systems that manage to train a network to characterize lens aberration in a very comprehensive way and essentially reverse any optical distortion.”
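Glass has not published how its networks characterize or reverse lens aberrations. As a classical stand-in for the general idea of measuring a distortion and then inverting it, here is a sketch using a one-coefficient radial distortion model. The coefficient `K1`, the function names and the fixed-point inversion are all assumptions for illustration, not the company’s technique.

```python
def distort(point, k1):
    """Apply one-coefficient radial distortion to a normalized image point."""
    x, y = point
    scale = 1.0 + k1 * (x * x + y * y)
    return (x * scale, y * scale)

def undistort(point, k1, iterations=20):
    """Invert the distortion by fixed-point iteration (fine for mild k1)."""
    xd, yd = point
    x, y = xd, yd  # start from the distorted position
    for _ in range(iterations):
        scale = 1.0 + k1 * (x * x + y * y)
        x, y = xd / scale, yd / scale
    return (x, y)

K1 = -0.2                            # hypothetical calibrated coefficient (barrel)
ideal = (0.5, 0.3)                   # where the point should land
observed = distort(ideal, K1)        # where the lens actually puts it
recovered = undistort(observed, K1)  # mapped back using the known model
```

In this toy case a single number describes the lens. Attar’s claim is that a trained network can play the same role for far more complex aberrations that vary across the frame.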

As an example, Attar provided a case study in which Glass had DXO evaluate the camera on a Moto Edge 40, then evaluate it again with GlassAI installed. The Glass-processed images are all clearly improved, sometimes dramatically so.

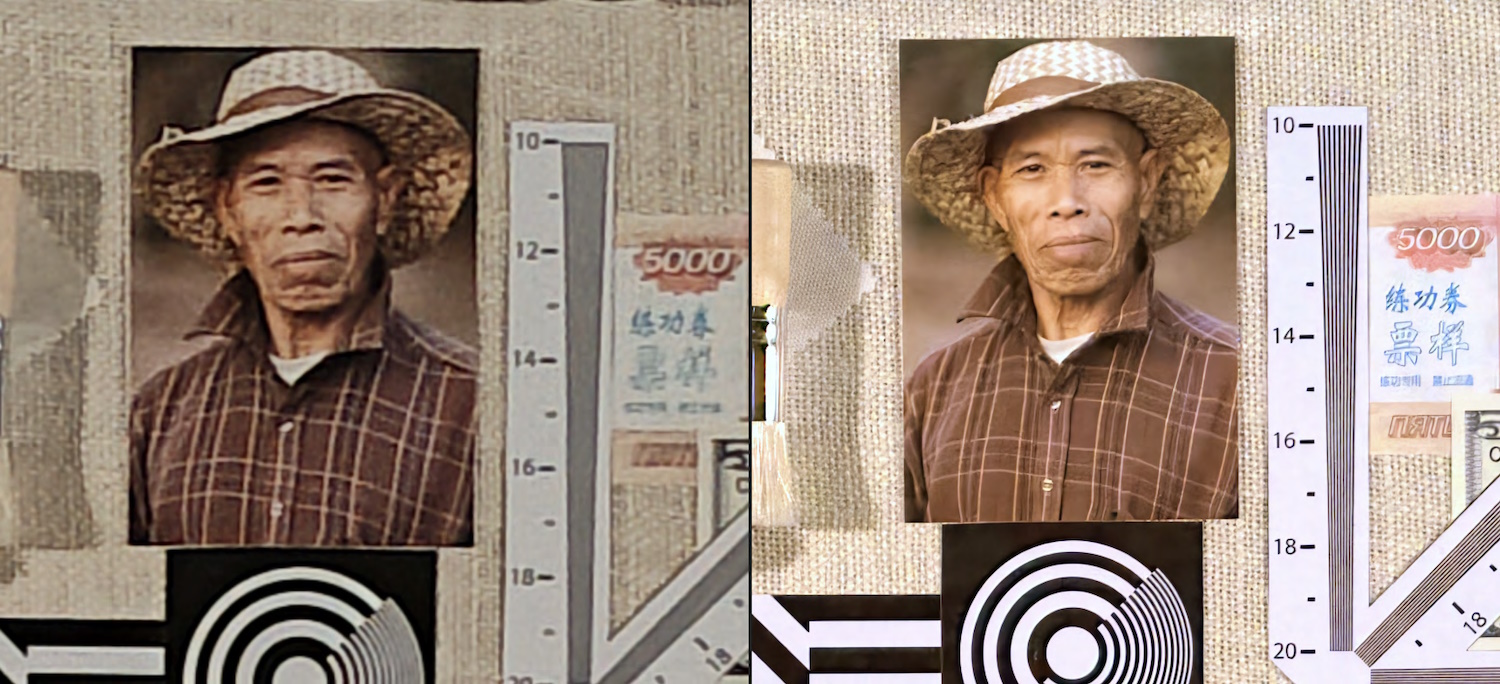

Image Credits: Glass / DXO

At low light levels, the built-in ISP struggles to differentiate fine lines, textures and facial details in its night mode. With GlassAI, the image is sharp even with half the exposure time.

You can pixel-peep at some test photos Glass provided by flipping between the originals and the finals.

Companies that assemble phones and cameras have to spend a lot of time tuning the ISP so that the sensor, lens and other pieces work together properly to make the best possible image. It seems, however, that Glass’s one-size-fits-all process can do a better job in a fraction of the time.

“The time it takes us to train shippable software from the time we get our hands on a new type of device … varies from a few hours to a few days. For reference, phone manufacturers spend months fine-tuning picture quality, with huge teams. Our process is fully automated so we can support multiple devices in a matter of days,” Attar said.

The neural ISP is also end-to-end, which in this context means it goes straight from sensor RAW to the final image without requiring extra processes such as denoising, sharpening and so on.

Left: RAW, right: Glass-processed. Image Credits: Glass

When I asked, Attar was careful to differentiate his work from super-resolution AI services, which take a finished image and upscale it. These are often not “recovering” details so much as inventing them where it seems appropriate, a process that can sometimes produce undesirable results. Although Glass uses AI, it is not generative the way many image-related AIs are.

Today marks the product’s widespread availability, presumably after a long period of testing with partners. If you’re building an Android phone, it might be a good idea to at least give it a try.

On the hardware side, the phone with the odd lozenge-shaped anamorphic camera will have to wait until its manufacturer is ready to go public.

While Glass develops its technology and tests it with customers, it has also been busy raising funding. The company just closed an “extended seed” of $9.3 million, which I put in quotes because the seed round was in 2021. The new funding was led by GV, with participation from Future Ventures, Abstract Ventures and LDV Capital.