Keeping up with an industry as fast-paced as artificial intelligence is a tall order. So, until an AI can do it for you, here’s a helpful roundup of recent stories in the world of machine learning, along with notable research and experiments we didn’t cover on their own.

This week in AI, I’d like to shine a spotlight on labeling and annotation startups, such as Scale AI, which is reportedly in talks to raise new capital at a valuation of $13 billion. Labeling and annotation platforms may not get the attention that impressive new AI models like OpenAI’s Sora do. But they are essential. Without them, modern AI models would arguably not exist.

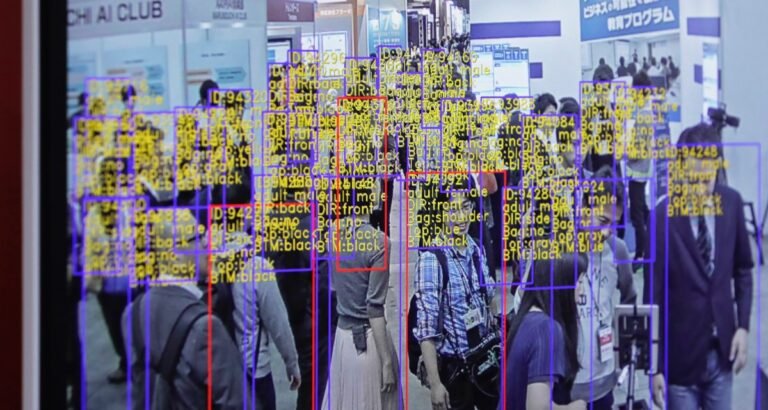

The data on which many models are trained must be labeled. Why? Labels, or tags, help models understand and interpret data during the training process. For example, labels for training an image recognition model can take the form of markings around objects (“bounding boxes”) or captions that refer to each person, place, or object depicted in an image.
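To make that concrete, here is a minimal sketch of what a single labeled image record might look like. The class names, field names, file name, and coordinates are all hypothetical and chosen only for illustration; real labeling platforms each use their own formats.

```python
from dataclasses import dataclass


@dataclass
class BoundingBox:
    """One labeled object: a tag plus pixel coordinates of its box (hypothetical schema)."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        # Width times height, clamped at zero for malformed boxes.
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


@dataclass
class ImageAnnotation:
    """Everything an annotator attaches to one image (hypothetical schema)."""
    image_file: str
    caption: str
    boxes: list[BoundingBox]


# A made-up example record: one caption and two boxed objects.
example = ImageAnnotation(
    image_file="street.jpg",
    caption="A cyclist passing a parked car",
    boxes=[
        BoundingBox("cyclist", 40, 60, 120, 220),
        BoundingBox("car", 150, 100, 400, 240),
    ],
)

labels = [box.label for box in example.boxes]
print(labels)
```

A large dataset is this kind of record repeated across every image, which is where the sheer volume of annotation work comes from.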

The accuracy and quality of labels significantly affect the performance — and reliability — of trained models. And annotation is a huge undertaking, requiring thousands to millions of tags for the largest and most sophisticated datasets used.

So you’d think that data annotators would be treated well, paid living wages, and given the same benefits that the engineers who build the models enjoy. Often, though, the opposite is true, a product of the brutal working conditions that many annotation and labeling startups foster.

Companies with billions in the bank, like OpenAI, have relied on annotators in third-world countries paid only a few dollars an hour. Some of these annotators are exposed to highly disturbing content, such as graphic images, but are not given time off (as they are usually contractors) or access to mental health resources.

An excellent piece in NY Mag pulls back the curtain on Scale AI in particular, which recruits annotators in far-flung places such as Nairobi, Kenya. Some of the tasks on Scale AI take labelers multiple eight-hour workdays (with no breaks) and pay as little as $10. And these workers are subject to the vagaries of the platform. Annotators sometimes go long stretches without getting work, or get booted off Scale AI, as happened with contractors in Thailand, Vietnam, Poland, and Pakistan recently.

Some annotation and labeling platforms claim to provide “fair trade” work. In fact, they have made it a central part of their branding. But as Kate Kaye of MIT Tech Review notes, there are no regulations, only weak industry standards for what constitutes ethical labeling work, and the companies’ own definitions vary widely.

So what should we do? Barring a huge technological breakthrough, the need to annotate and label data for AI training isn’t going away. We can hope that the platforms will self-regulate, but the most realistic solution seems to be policymaking. That in itself is a difficult prospect, but it’s the best chance we have, I’d say, of changing things for the better. Or at least starting to.

Here are some other notable AI stories from the past few days:

- OpenAI creates a voice cloner: OpenAI is previewing a new AI tool it developed, Voice Engine, which lets users clone a voice from a 15-second recording of someone speaking. But the company is choosing not to release it widely (yet), citing risks of misuse and abuse.

- Amazon is doubling down on Anthropic: Amazon has invested an additional $2.75 billion in growing AI powerhouse Anthropic, following through on the option it left open last September.

- Google.org launches an accelerator: Google.org, the philanthropic arm of Google, is launching a new $20 million, six-month program to help fund nonprofits developing technology that harnesses generative AI.

- A new model architecture: AI startup AI21 Labs has released a generative AI model, Jamba, that uses a novel model architecture (state space models, or SSMs) to improve efficiency.

- Databricks launches DBRX: In other model news, Databricks this week launched DBRX, a generative AI model similar to OpenAI’s GPT series and Google’s Gemini. The company claims it achieves cutting-edge results on a number of popular AI benchmarks, including several that measure reasoning.

- Uber Eats and UK AI regulation: Natasha writes about how an Uber Eats courier’s fight against AI bias shows that justice under UK AI regulations is hard-won.

- EU guidelines on election security: The European Union on Tuesday published draft election security guidelines aimed at the roughly two dozen platforms regulated under its Digital Services Act, including guidelines on preventing content recommendation algorithms from spreading generative AI-based disinformation (aka political deepfakes).

- Grok upgrades: X’s Grok chatbot will soon get an upgraded underlying model, Grok-1.5 — at the same time all Premium subscribers on X will get access to Grok. (Grok was previously exclusive to X Premium+ customers.)

- Adobe extends Firefly: This week, Adobe introduced Firefly Services, a set of more than 20 new generative and creative APIs, tools and services. It also launched Custom Models, which allows businesses to customize Firefly models based on their assets, as part of Adobe’s new GenStudio suite.

More machine learning

How’s the weather? AI is increasingly able to tell you. I noted a few efforts at hourly, weekly, and century-scale forecasting a few months ago, but like all things AI, the field is moving fast. The teams behind MetNet-3 and GraphCast have published a paper describing a new system called SEEDS, for Scalable Ensemble Envelope Diffusion Sampler.

Animation showing how more forecasts create a more even distribution of weather forecasts.

SEEDS uses diffusion to produce “ensembles” of plausible weather outcomes for an area based on the input (radar readings or orbital imagery, perhaps) much faster than physics-based models. With bigger ensembles, forecasters can cover more edge cases (such as an event that occurs in only 1 out of 100 possible scenarios) and be more confident about the more likely situations.
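A rough back-of-the-envelope sketch shows why ensemble size matters for rare events. It is not SEEDS itself and uses nothing from the paper: it simply assumes each ensemble member is an independent draw, and the function name is made up for illustration. Under that assumption, the chance that at least one of n members shows an event of probability p is 1 - (1 - p)^n.

```python
def chance_ensemble_contains_event(event_probability: float, ensemble_size: int) -> float:
    """Probability that at least one ensemble member shows the event.

    Assumes members are independent draws, which is a simplification
    made for this sketch, not a claim about how SEEDS samples.
    """
    if not 0.0 <= event_probability <= 1.0:
        raise ValueError("event_probability must be between 0 and 1")
    if ensemble_size < 0:
        raise ValueError("ensemble_size must be non-negative")
    return 1.0 - (1.0 - event_probability) ** ensemble_size


# The 1-in-100 scenario from the text, at a few illustrative ensemble sizes.
for size in (10, 100, 1000):
    chance = chance_ensemble_contains_event(0.01, size)
    print(f"{size:>5} members: {chance:.3f}")
```

With a handful of members, a 1-in-100 event will usually be missed entirely; with hundreds or thousands, it almost always shows up somewhere in the ensemble, which is why cheaper ensemble generation is useful.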

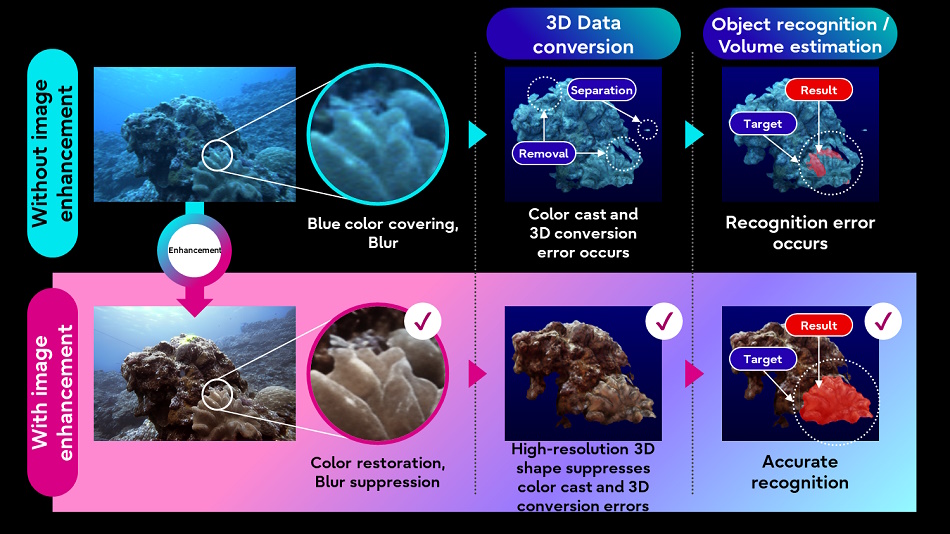

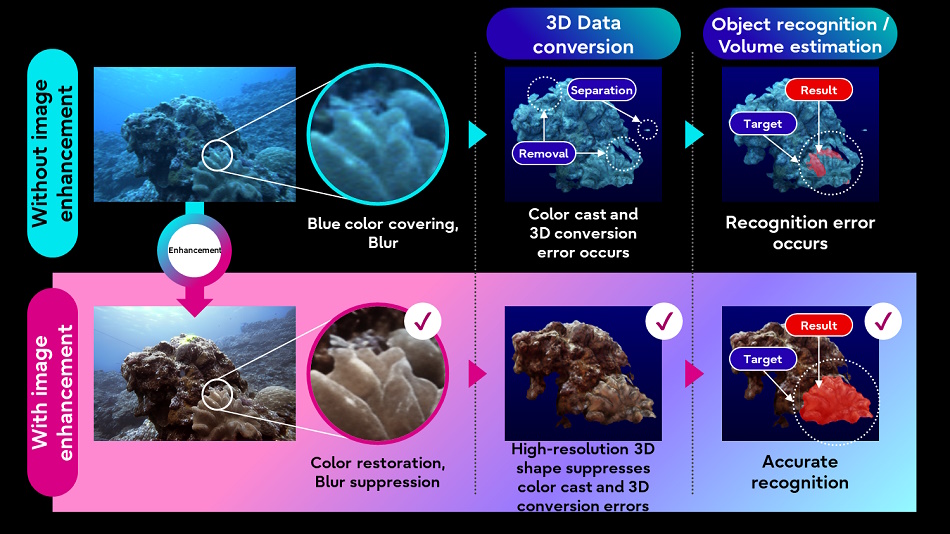

Fujitsu also hopes to better understand the physical world by applying AI image handling techniques to underwater imagery and lidar data collected by autonomous underwater vehicles. Improving the quality of the imagery will allow other, less sophisticated processes (such as 3D conversion) to work better on the target data.

Image Credits: Fujitsu

The idea is to create a “digital twin” of water that can help simulate and predict new developments. We’re a long way from that, but you have to start somewhere.

As for LLMs, researchers have found that they mimic intelligence with an even simpler method than expected: linear functions. Honestly, the math is beyond me (vector stuff in many dimensions), but this writeup at MIT makes it pretty clear that the recall mechanism of these models is pretty… basic.

“Even though these models are really complex, non-linear functions that are trained on a lot of data and are very hard to understand, sometimes there are very simple mechanisms at work within them. This is an example of that,” said co-lead author Evan Hernandez. If you are more technically minded, see the paper here.

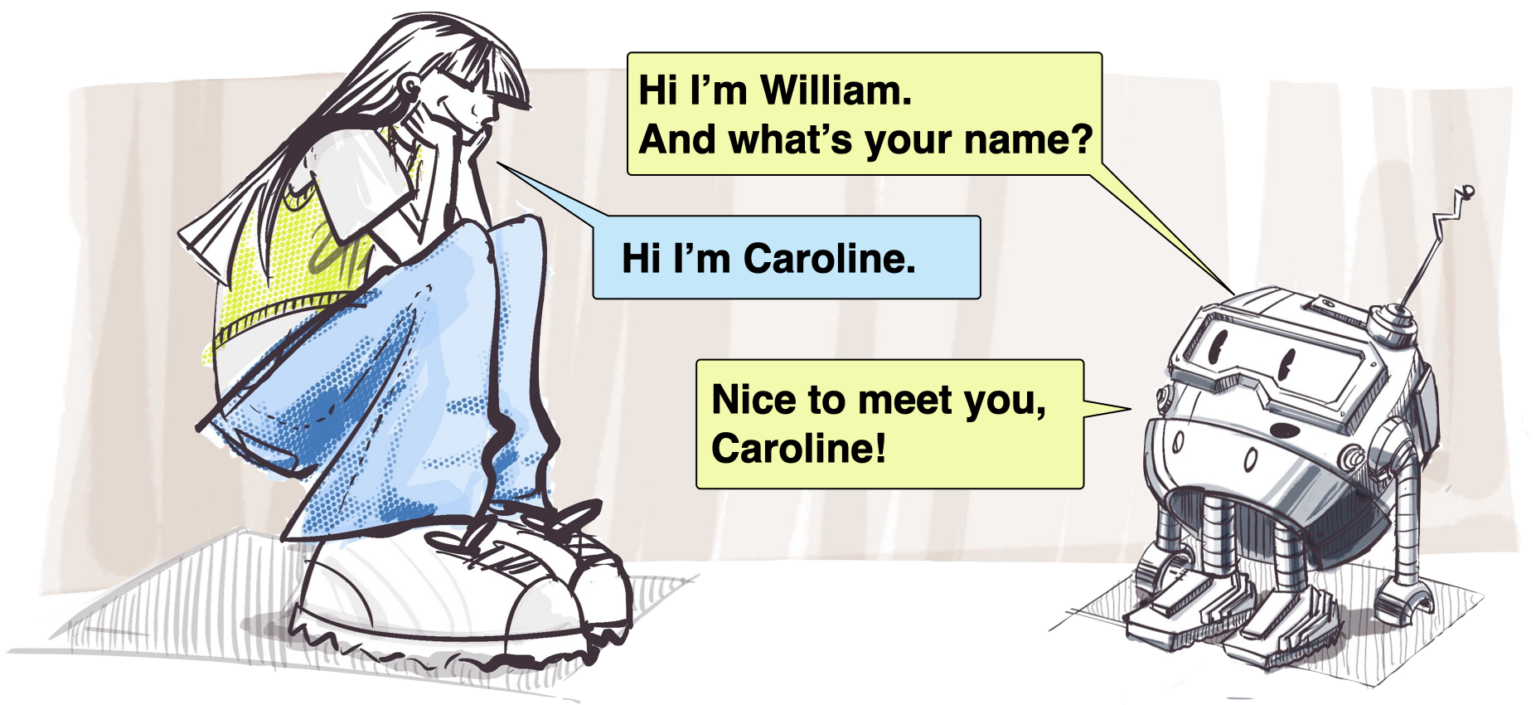

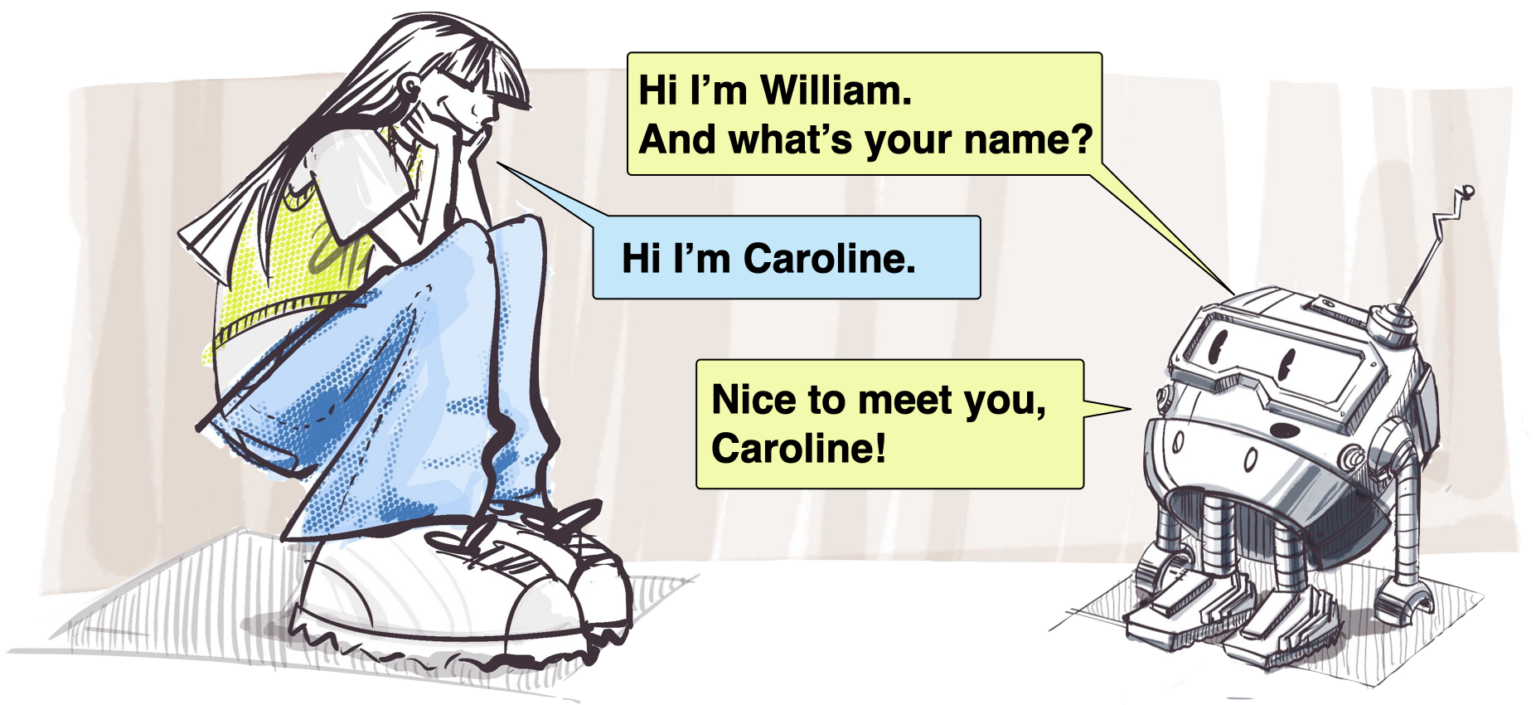

One way these models can fail is by not understanding context or feedback. Even a really competent LLM might not “get it” if you tell it your name is pronounced a certain way, since these models don’t actually know or understand anything. In cases where this might be important, such as human-robot interactions, it could put people off if the robot acts this way.

Disney Research has been looking into automated character interactions for a long time, and this paper on name pronunciation and reuse showed up a little while ago. It seems obvious, but extracting the phonemes when someone introduces themselves and encoding those rather than just the written name is a smart approach.

Image Credits: Disney Research

Finally, as AI and search increasingly overlap, it’s worth reassessing how these tools are used and whether this unholy union presents any new risks. Safiya Umoja Noble has been an important voice in AI and search ethics for years, and her opinion is always enlightening. She did a nice interview with the UCLA news team about how her work has evolved and why we need to stay alert when it comes to bias and bad search habits.